SlimToolkit: Optimizing Images for Efficiency and Performance


Introduction

In the world of modern application deployment, Docker has become an essential tool for developers and DevOps professionals alike. It allows for the packaging of applications into containers, making them portable, consistent, and easy to deploy across environments. While Docker simplifies application deployment, it can sometimes introduce challenges related to the size of the resulting Docker images. Large images not only consume more resources, but they can also slow down deployment times and introduce security risks. This is where Slim Toolkit comes into play—a powerful toolkit designed to optimize Docker images by reducing their size while maintaining their functionality.

The Problem with Docker Image Size

One of the most common issues Docker users face is the size of the Docker images they create. Docker images are built in layers, each corresponding to a step in the Dockerfile. While this is an efficient way to manage and reuse components, it can also lead to image bloat. Large Docker images are problematic for several reasons.

First, they take up significant disk space, both in the local environment and in cloud storage or on the Docker registry. As images grow, they consume more bandwidth when being transferred between different systems, whether during builds, deployments, or updates. This can significantly slow down development cycles, particularly in CI/CD pipelines where fast iteration is crucial.

Additionally, larger images take longer to pull and unpack, which slows down container startup on fresh hosts and whenever a service scales out. In production environments, especially on resource-constrained systems or in cloud environments with strict resource limits, this overhead can lead to performance degradation. Larger images also increase the attack surface of a container. Every unnecessary file, library, or tool included in an image is a potential vector for exploits, so the risk of security vulnerabilities rises with each one.

Thus, the problem with Docker image size is not just about disk space, but also about performance, efficiency, and security. Reducing image size is crucial for optimizing workflows and reducing operational overhead.
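
To see where an image's size actually comes from, you can list its layers with `docker history`. The sketch below assumes a local Docker daemon, and the function names are hypothetical. `show_layers` asks Docker for raw byte counts (`--human=false`) and the full creating step (`--no-trunc`) of each layer. `sum_layer_sizes` echoes those lines and appends a total.

```shell
#!/usr/bin/env bash
# show_layers IMAGE: print "size_in_bytes<TAB>creating_step" for each layer,
# followed by a total. Requires a running Docker daemon (hypothetical helper).
show_layers() {
  docker history --no-trunc --human=false \
    --format '{{.Size}}\t{{.CreatedBy}}' "$1" | sum_layer_sizes
}

# sum_layer_sizes: read "size<TAB>step" lines on stdin, echo them unchanged,
# and finish with a "TOTAL<TAB>N bytes" line.
sum_layer_sizes() {
  awk -F'\t' '{ total += $1; print } END { printf "TOTAL\t%.0f bytes\n", total }'
}
```

For example, `show_layers my-app:<tag>` lists each layer of the image slimmed later in this article. Unusually large layers usually point at the Dockerfile steps worth revisiting.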

The Benefits of Using Smaller Docker Images

Reducing the size of Docker images offers significant benefits across multiple areas of software development and deployment.

When Docker images are smaller, they become quicker to build, store, and transfer. This translates directly into faster deployment times. In environments where microservices or containerized applications are frequently deployed and updated, reducing image size leads to lower overhead and quicker cycle times. Smaller images make it easier to maintain efficient CI/CD pipelines, enabling faster and more frequent releases.

From a performance perspective, smaller images use fewer resources. This is particularly important in production environments, where multiple containers may be running concurrently. With smaller images, hosts need less disk space and network bandwidth, and new containers start faster because there is less to pull, making the system more efficient overall. In the context of cloud computing, this efficiency can reduce the operational costs associated with storage and compute resources.

Moreover, smaller Docker images are generally more secure. Stripping out unnecessary components and dependencies reduces the number of potential security vulnerabilities. For instance, development tools, compilers, and debugging utilities are often included in a typical Docker image, yet they are not needed in production and may introduce unnecessary risks. A more streamlined image reduces the "attack surface," making it harder for malicious actors to exploit vulnerabilities.

Finally, the simplicity and maintainability of smaller images cannot be overstated. With fewer layers and components, smaller images are easier to understand and maintain. Developers can more easily troubleshoot issues, update dependencies, or patch security vulnerabilities because there’s less complexity involved in the container itself.

What is Slim Toolkit?

Slim Toolkit is an open-source toolkit designed to optimize Docker images by reducing their size without sacrificing their functionality. It works by analyzing your Docker images and stripping away unnecessary files, dependencies, and libraries that are not required for the application to run. Slim Toolkit helps you create a “slimmed down” version of your original Docker image, leading to faster, smaller, and more secure containers.

The core goal of Slim Toolkit is to minimize Docker image sizes while preserving their behavior and functionality. It achieves this by intelligently identifying and removing non-essential components—those that are not used by the running application. This can include development tools, unused binaries, documentation files, and other extraneous content that might have been included in the Docker image during the build process.

By automating the optimization process, Slim Toolkit takes much of the guesswork out of image optimization. This makes it a valuable tool for any developer or DevOps professional looking to streamline their Docker containers.

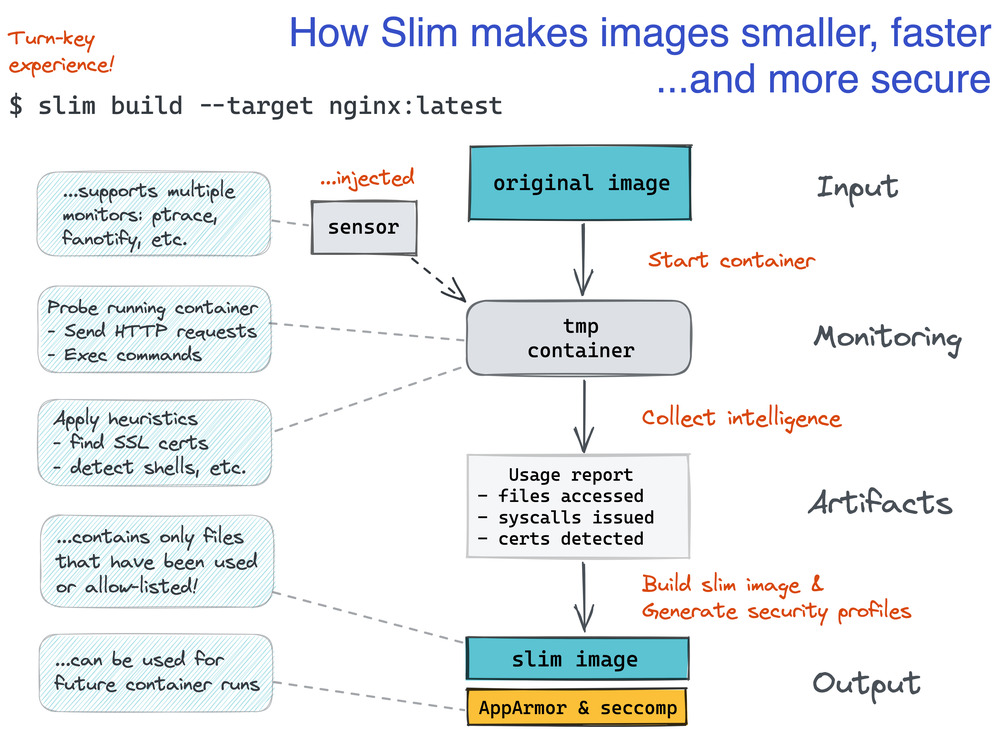

How Slim Toolkit Works

Slim Toolkit operates using a combination of static and dynamic analysis to understand the contents and behavior of a Docker image. The process can be broken down into several key steps:

- Initial Analysis: Slim Toolkit first inspects the Docker image to identify its layers and contents. It then starts a temporary container from the image and monitors which files and binaries the application actually uses while it runs. This is how it identifies the dependencies that are needed at runtime.

- Stripping Unused Components: After identifying unused or unnecessary files, Slim Toolkit removes them from the image. This can include non-essential binaries, libraries, development files, documentation, and configuration files that are not required in the production environment. The tool also removes redundant or unused dependencies that may have been included during the build process.

- Rebuilding the Image: Once the analysis is complete, Slim Toolkit builds a new Docker image that contains only the components it kept. The result is often much smaller than the original; how much smaller depends on how much of the original image the application actually uses.

- Testing the Slimmed Image: The goal is for the slimmed image to behave identically to the original. However, Slim Toolkit can only keep what it observed during analysis, plus any paths you explicitly include. Its HTTP probes, enabled by default, help by exercising the application while it is being monitored. You should still run your own tests against the slimmed container to confirm that no critical functionality has been removed.

Through this series of steps, Slim Toolkit makes it easy to optimize Docker images automatically, reducing image size without compromising performance or security. It’s important to note that Slim Toolkit works with a wide variety of applications, from simple ones to complex microservices-based applications.
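
The analysis and stripping steps can be illustrated with a toy model. The shell sketch below is not Slim Toolkit's implementation; it only mimics the idea under simple assumptions:

- One text file lists every path in the original image.
- Another lists the paths observed while the application ran.
- Any extra arguments are path prefixes that play the role of the `--include-path` option used with `slim build`.

The function name `keep_list` is hypothetical.

```shell
#!/usr/bin/env bash
# Toy model of "keep only what was used" (illustrative; not Slim Toolkit's code).
# keep_list ALL_FILES ACCESSED_FILES [INCLUDE_PREFIX...]
#   ALL_FILES      - file listing every path in the original image, one per line
#   ACCESSED_FILES - file listing the paths observed while the app ran
#   INCLUDE_PREFIX - path prefixes to keep regardless of observation
keep_list() {
  local all="$1" accessed="$2" prefix
  shift 2
  {
    # Paths present in both lists (fixed-string, whole-line match).
    grep -Fxf "$accessed" "$all"
    # Paths that start with any explicitly included prefix.
    for prefix in "$@"; do
      awk -v p="$prefix" 'index($0, p) == 1' "$all"
    done
  } | LC_ALL=C sort -u   # deterministic order, duplicates removed
}
```

Suppose only `/app/server` was observed and `/etc/apache2` was passed as a prefix. Then paths such as `/usr/share/doc/README` or `/usr/bin/gcc` would be left out. The model also shows why the challenges discussed below arise: anything that was neither observed nor explicitly included is dropped.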

Installation

To get started with Slim Toolkit, you first need to install it. The toolkit is distributed as prebuilt binaries (`slim` and the `slim-sensor` helper it uses), which can be downloaded from its GitHub repository.

```shell
~$ curl -L -o ds.tar.gz https://github.com/slimtoolkit/slim/releases/download/1.40.11/dist_linux.tar.gz && \
tar -xvf ds.tar.gz && \
mv dist_linux/slim /usr/local/bin/ && \
mv dist_linux/slim-sensor /usr/local/bin/
```

In short, Slim Toolkit is a powerful tool that helps solve a critical problem in Docker containerization: large image sizes. By optimizing Docker images to make them smaller, Slim Toolkit enhances performance, security, and maintainability. With easy installation and usage, it's a tool that every Docker user should consider integrating into their workflows to streamline their deployment pipeline and reduce overhead.
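
If you want to pin a different release or install somewhere other than `/usr/local/bin`, the installation steps can be wrapped in a small function. This is a sketch with two assumptions:

- The release still ships the `dist_linux.tar.gz` archive containing `dist_linux/slim` and `dist_linux/slim-sensor`, as in the command above, which covers x86_64 Linux only.
- The function names are hypothetical.

The download goes to a temporary directory that is removed afterwards. `curl -f` makes HTTP errors fail the install instead of saving an error page.

```shell
#!/usr/bin/env bash
# slim_release_url VERSION: URL of the Linux x86_64 release archive (assumed layout).
slim_release_url() {
  echo "https://github.com/slimtoolkit/slim/releases/download/$1/dist_linux.tar.gz"
}

# install_slim VERSION [DEST]: download, unpack, and install slim and slim-sensor.
# DEST defaults to /usr/local/bin, which usually requires root.
install_slim() {
  local version="$1" dest="${2:-/usr/local/bin}" tmp status
  tmp=$(mktemp -d) || return 1
  curl -fsSL -o "$tmp/slim.tar.gz" "$(slim_release_url "$version")" &&
    tar -xzf "$tmp/slim.tar.gz" -C "$tmp" &&
    install -m 0755 "$tmp/dist_linux/slim" "$tmp/dist_linux/slim-sensor" "$dest/"
  status=$?
  rm -rf "$tmp"   # clean up whether or not the install succeeded
  return "$status"
}
```

For example, `install_slim 1.40.11 "$HOME/.local/bin"` installs the same release without root, provided that directory exists and is on your `PATH`.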

Slimming Your Image

Once Slim Toolkit is installed, slimming an image comes down to a single `slim build` command. The following example slims `my-app:<tag>` while explicitly keeping several system directories:

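```shell
~$ slim build --http-probe=false \
--include-path=/usr/sbin/ --include-path=/usr/bin --include-path=/usr/lib --include-path=/var --include-path=/run --include-path=/etc/apache2 \
--target my-app:<tag> \
--tag my-app:<tag>-slim \
--continue-after=10
```

This command analyzes the image, strips out unnecessary components, and creates a new, optimized image. The options work as follows:

- `--http-probe=false` turns off Slim Toolkit's default HTTP probing of the running container.
- Each `--include-path` keeps the given directory in the result even if the application was not observed using it.
- `--continue-after=10` ends the monitoring phase after 10 seconds.

The slimmed image is tagged with the name passed to `--tag`, and the original image remains unchanged.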
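
To check how much a run actually saved, you can compare the sizes of the two images. This sketch assumes a local Docker daemon and uses `docker image inspect` to read each image's size in bytes. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# image_size IMAGE: print the image size in bytes as reported by Docker.
image_size() {
  docker image inspect --format '{{.Size}}' "$1"
}

# percent_reduction ORIGINAL_BYTES SLIM_BYTES: print the reduction to one decimal place.
percent_reduction() {
  awk -v o="$1" -v s="$2" 'BEGIN { printf "%.1f\n", (o - s) * 100 / o }'
}

# compare_images ORIGINAL SLIM: report both sizes and the reduction (needs Docker).
compare_images() {
  local orig slim
  orig=$(image_size "$1") || return 1
  slim=$(image_size "$2") || return 1
  printf '%s: %s bytes\n%s: %s bytes\nreduction: %s%%\n' \
    "$1" "$orig" "$2" "$slim" "$(percent_reduction "$orig" "$slim")"
}
```

For example, `compare_images my-app:<tag> my-app:<tag>-slim` reports the sizes of the original and slimmed images from the command above.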

Challenges with Slim Toolkit

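While Slim Toolkit is effective, it can be overly aggressive. It keeps only what it observed the application using during analysis, plus any paths you explicitly include. As a result, it can remove files and dependencies needed only on code paths that were not exercised, such as error handlers, rarely used features, or libraries loaded on demand. The result can be a container that starts correctly but breaks later.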

How to Mitigate These Issues

- Understand Your Dependencies: Identify which files your application needs to run and make sure they are kept.

- Use Configuration Options: Slim Toolkit lets you force critical files and directories into the slimmed image with the `--include-path` flag, as in the command above.

- Test Iteratively: Run your tests against the slimmed image to find missing dependencies, add them, and slim again, refining the process over time.
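
One way to test iteratively is to export the slimmed image's filesystem and check that paths you know your application needs are still present. A slimmed image may no longer contain a shell or basic utilities such as `ls`. The sketch below therefore lists files from the host using `docker create` (the container is never started) and `docker export`. It assumes a local Docker daemon; the helper names and the example paths are hypothetical.

```shell
#!/usr/bin/env bash
# list_image_files IMAGE: print every path in IMAGE's filesystem as an absolute path.
# The container is created but never started, so nothing needs to run inside it.
list_image_files() {
  local cid
  cid=$(docker create "$1") || return 1
  docker export "$cid" | tar -tf - | sed -e 's|^\./||' -e 's|/$||' -e 's|^|/|'
  docker rm "$cid" > /dev/null
}

# missing_paths FILE_LIST PATH...: print each PATH not listed in FILE_LIST;
# return 1 if anything is missing.
missing_paths() {
  local list="$1" p rc=0
  shift
  for p in "$@"; do
    if ! grep -Fxq -- "$p" "$list"; then
      echo "missing: $p"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, `list_image_files my-app:<tag>-slim > slim-files.txt && missing_paths slim-files.txt /usr/sbin/apache2 /etc/apache2/apache2.conf` reports any required path that was dropped. You can then add it with `--include-path` and slim the image again.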