Not Every Application is Ideal for Cloud-Native Deployment

Introduction

The rise of cloud-native technologies has fundamentally changed how we approach application development, deployment, and scaling. Kubernetes, in particular, has become the de facto standard for orchestrating containerized applications in cloud environments. The appeal of Kubernetes lies in its ability to automate the management of containers, scale applications effortlessly, and provide high levels of resilience and availability. As a result, many companies, developers, and architects now assume that Kubernetes is the best way to deploy every application.

However, while Kubernetes and cloud-native paradigms have proven to be game-changers, not every application is suited to this kind of deployment. The assumption that every application should be Dockerized and deployed on Kubernetes can lead to inefficiencies and unnecessary complexity. This is especially true for applications built on older languages and platforms that were not designed with cloud-native deployment in mind. Many legacy applications, particularly those built with Java, .NET, and PHP, are often better suited to more traditional deployment models, such as bare-metal servers or virtual machines.

This article explores why some applications—especially legacy ones—are not ideal candidates for cloud-native deployment and why forcing them into a Kubernetes-based architecture can lead to inefficiencies. We will also discuss which applications are better suited for Kubernetes and how modern cloud infrastructure can still be leveraged to improve legacy application management without resorting to full containerization.

Understanding Cloud-Native and Kubernetes

To fully understand the challenges of deploying certain applications on Kubernetes, it is important to first understand what "cloud-native" and "Kubernetes-based" applications entail.

What Are Cloud-Native Applications?

Cloud-native applications are designed to take full advantage of cloud computing features. These applications are typically built using a microservices architecture and are packaged into containers—small, lightweight, and portable units that include everything an application needs to run, from the code itself to dependencies and libraries. These containers are designed to run consistently across various environments, whether on a developer’s machine, in testing, or in production.

The primary characteristics of cloud-native applications include:

- Containerization: Applications are packaged into containers, which make them easily portable and isolated from the underlying infrastructure.

- Microservices: These applications are broken down into smaller, independent services that can be developed, deployed, and scaled independently.

- Automation: Cloud-native apps are built to be automated from end to end, using CI/CD pipelines, monitoring tools, and scaling mechanisms.

- Resiliency: Cloud-native applications are designed to recover automatically from failures, so that the system stays available even when individual components fail.

Kubernetes, an open-source container orchestration platform, is the most widely adopted tool for managing cloud-native applications. Kubernetes automates the deployment, scaling, and management of containerized applications. It ensures that the right number of containers are running, balances load, and handles failures seamlessly by spinning up new containers when required.
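
The idea of keeping the right number of containers running can be pictured as a loop that compares a desired count with what is actually running and corrects the difference. The following is a toy model in Python, not the Kubernetes API: `ToyCluster`, `reconcile`, and `crash` are hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ToyCluster:
    """A toy stand-in for a cluster; this is not the Kubernetes API."""
    desired_replicas: int
    running: set = field(default_factory=set)
    _ids: count = field(default_factory=lambda: count(1), repr=False)

    def reconcile(self) -> None:
        """Start or stop instances until the running count matches the desired count."""
        while len(self.running) < self.desired_replicas:
            self.running.add(f"pod-{next(self._ids)}")
        while len(self.running) > self.desired_replicas:
            self.running.pop()

    def crash(self, name: str) -> None:
        """Simulate the failure of one instance."""
        self.running.discard(name)


cluster = ToyCluster(desired_replicas=3)
cluster.reconcile()      # starts pod-1, pod-2, pod-3
cluster.crash("pod-2")   # one instance fails
cluster.reconcile()      # a replacement is started to restore the desired count
print(sorted(cluster.running))
```

The point of the sketch is the shape of the control loop: the operator declares a desired state, and the platform repeatedly works to make the actual state match it.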

Why Kubernetes Is Popular

Kubernetes is popular for several reasons:

- Scalability: Kubernetes allows you to easily scale your application by adding or removing containers based on demand.

- Portability: Kubernetes abstracts away the underlying infrastructure, allowing applications to run consistently across different environments (on-premises or in the cloud).

- Self-Healing: If a container crashes or becomes unresponsive, Kubernetes automatically replaces it, ensuring high availability.

- Managed Infrastructure: Kubernetes allows developers to focus on writing code, while the platform manages the complexities of the underlying infrastructure.
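
As a rough illustration of demand-based scaling, the sketch below applies the ratio rule described in the Kubernetes Horizontal Pod Autoscaler documentation: desired replicas = ceil(current replicas × current metric / target metric). The function name, the default bounds, and the sample numbers are assumptions made for this example; the real controller also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified version of the autoscaler's ratio rule, clamped to [min, max]."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical numbers: 4 replicas averaging 90% CPU against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))
```

With these sample values the ratio is 1.5, so the sketch asks for 6 replicas. The same call with a metric of 30 would scale down to 2.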

Despite these advantages, Kubernetes isn't a one-size-fits-all solution. Some applications—especially legacy ones—require a different approach.

The Case Against Containerizing Legacy Applications

While Kubernetes is an excellent fit for many modern applications, legacy applications are not always suitable candidates for cloud-native deployment. Legacy applications often have characteristics that make them ill-suited for containers and Kubernetes orchestration.

Legacy Programming Languages and Their Constraints

One of the primary reasons legacy applications don’t mesh well with cloud-native architectures is the languages they are built with. Programming languages that were designed before the cloud era—such as Java, .NET, and PHP—have certain features and limitations that complicate their deployment in containerized environments.

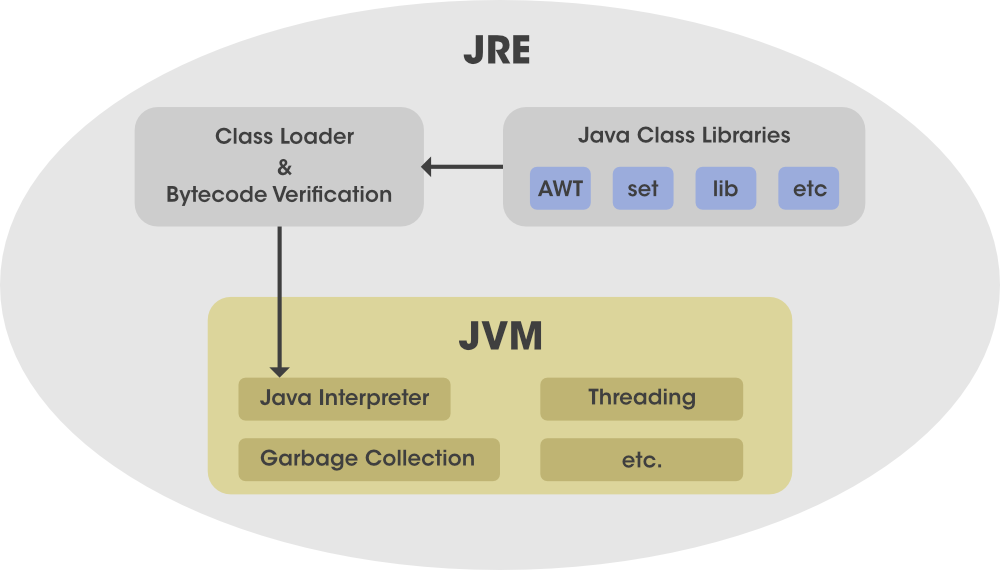

Java

Java, which has been around since the mid-1990s, was not built with containerization in mind. A typical Java application requires a Java Runtime Environment (JRE) to execute, which is relatively large compared to the runtimes of newer languages. When a Java application is containerized, each image bundles its own JRE and each running container starts its own JVM. Image layers can be shared between containers on the same node when they use the same base image, but the memory each JVM needs for its heap, class metadata, and JIT-compiled code is not shared. If a company runs many Java-based applications in a Kubernetes cluster, every pod (a group of one or more containers) carries that per-JVM overhead, which adds up in both disk space and memory.

Furthermore, Java applications are often monolithic and require significant configuration to run optimally, making them more difficult to manage in a distributed, microservices-based architecture. While Java can be refactored into microservices, this process requires a significant amount of work, and it might not be worth the effort for legacy applications that still perform adequately on traditional infrastructures.

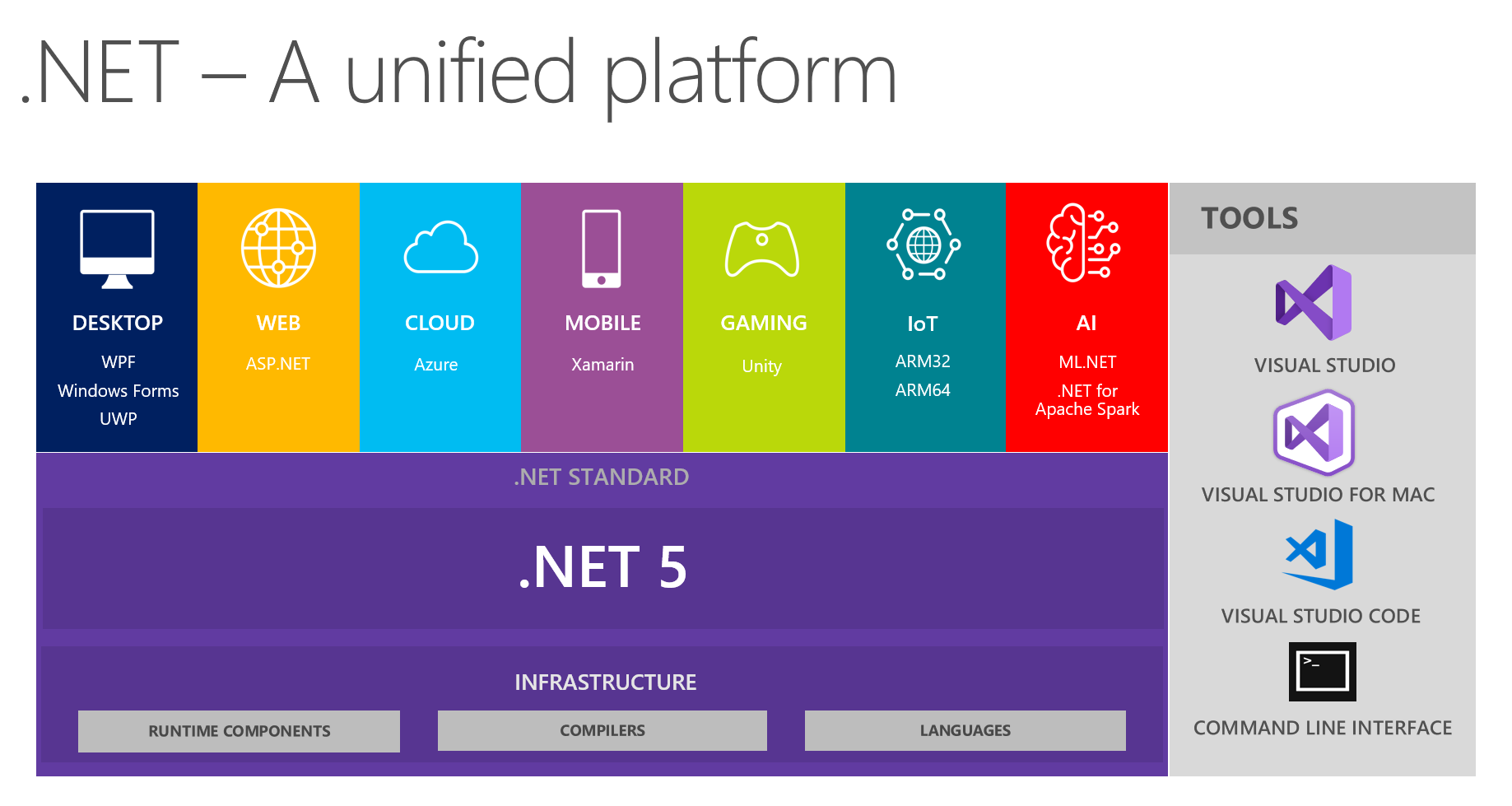

.NET

Similarly, .NET applications, which have been around since the early 2000s, are not naturally suited for cloud-native environments. Much like Java, .NET applications depend on the .NET runtime, which can be bulky and add overhead when deployed in containers. Although .NET Core (a cross-platform version of .NET) was introduced to be more cloud-friendly, legacy applications that rely on the older, Windows-only .NET Framework cannot run in Linux containers and are not easily containerized without considerable effort.

The need to ship large frameworks and runtimes inside each container can lead to performance issues, particularly when deploying multiple instances of .NET applications in a Kubernetes environment. The result can be high resource utilization and scaling difficulties.

PHP and Other Scripting Languages

PHP, Python, and other scripting languages also present challenges when deploying in cloud-native environments. While these languages can run on a wide variety of platforms, they tend to produce applications that include many dependencies and are often large in terms of disk usage. Containerizing these applications can result in large container images, which can impact startup times, increase storage requirements, and make scaling less efficient.

In contrast, languages like Go and Rust, which were designed with cloud-native paradigms in mind, produce standalone binaries that are much more lightweight. These smaller binaries are easy to package into containers and deploy on Kubernetes with minimal overhead.
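
To get a feel for how image size affects startup, a back-of-the-envelope estimate of transfer time is enough. The sketch below assumes a cold node with no cached layers and ignores decompression; the image sizes and the 1 Gbit/s bandwidth are made-up illustrative numbers, not measurements.

```python
def pull_seconds(image_mb: float, bandwidth_mbit_per_s: float) -> float:
    """Transfer time only: megabytes converted to megabits, divided by link speed."""
    return image_mb * 8 / bandwidth_mbit_per_s


# Hypothetical sizes: a runtime-heavy image versus a small static-binary image.
for name, size_mb in [("runtime-heavy image", 800), ("static-binary image", 20)]:
    print(f"{name}: ~{pull_seconds(size_mb, 1000):.2f} s to pull")
```

The absolute numbers matter less than the ratio: on the same link, an image forty times larger takes forty times longer to arrive before the container can even start.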

Resource Management Issues

When legacy applications are containerized, Kubernetes must allocate resources to each pod to ensure that the applications can run effectively. For example, Kubernetes may need to allocate a significant amount of CPU and memory to ensure that the JRE or .NET runtime can function properly, which can lead to inefficiencies in resource utilization. This can quickly become a problem when running a large number of containers, especially in a high-demand environment.

Resource management in Kubernetes works best when applications are designed to be stateless, lightweight, and optimized for cloud environments. Legacy applications, on the other hand, are often stateful and may require larger resource allocations, making them inefficient in Kubernetes clusters.
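
A simple way to see the effect of larger allocations is to count how many pods fit on one node. The sketch below is a simplification under stated assumptions: the node size and the per-pod requests are hypothetical, and it ignores resources reserved for the system as well as any per-node pod limit.

```python
def pods_per_node(node_cpu_m: int, node_mem_mib: int,
                  pod_cpu_m: int, pod_mem_mib: int) -> int:
    """Number of pods whose CPU and memory requests fit on one node.

    CPU is expressed in millicores and memory in MiB. The scarcer of the
    two resources decides the result.
    """
    return min(node_cpu_m // pod_cpu_m, node_mem_mib // pod_mem_mib)


# Hypothetical node: 4 CPU cores (4000 millicores) and 16 GiB of memory.
NODE_CPU_M, NODE_MEM_MIB = 4000, 16 * 1024

heavy = pods_per_node(NODE_CPU_M, NODE_MEM_MIB, pod_cpu_m=1000, pod_mem_mib=2048)
light = pods_per_node(NODE_CPU_M, NODE_MEM_MIB, pod_cpu_m=100, pod_mem_mib=64)
print(f"runtime-heavy service: {heavy} pods, lightweight service: {light} pods")
```

Under these assumed requests the same node holds 4 of the heavy pods but 40 of the light ones, which is the kind of gap the paragraph above describes.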

Cloud-Native Languages: The Better Fit for Kubernetes

While legacy languages may not be ideal for cloud-native deployments, newer programming languages like Go and Rust were designed with these architectures in mind. These languages are more lightweight and efficient, making them better suited for containerized environments like Kubernetes.

Characteristics of Cloud-Native Languages

Languages like Go and Rust, which emerged after the rise of cloud computing, are designed with cloud-native principles in mind. Both are compiled ahead of time to native machine code rather than to bytecode that needs a virtual machine, which means that they produce standalone, machine-ready binaries. The resulting binaries are small, self-contained, and do not require the overhead of large runtime environments like Java’s JRE or .NET’s runtime. This makes these languages well suited to cloud-native applications that are deployed in containers.

In addition to their small resource footprint, Go and Rust offer several other advantages for cloud-native applications:

- Fast Startup Times: Unlike applications that must first start an interpreter or a virtual machine, natively compiled Go and Rust programs start up quickly. This is a crucial factor in a Kubernetes environment, where containers are often spun up and torn down rapidly. Fast startup times improve the overall responsiveness and scalability of cloud-native applications.

- Memory Efficiency: Both Go and Rust are designed to be memory-efficient. Go’s garbage collector is optimized for low pause times in server workloads, and Rust’s ownership model frees memory deterministically without a garbage collector. This minimizes resource overhead, making these languages a good fit for containerized environments.

- Stateless and Scalable: Services written in Go and Rust are often designed to be stateless, which is a key requirement for cloud-native architectures, although statelessness is a property of the design rather than of the language. Stateless applications can scale horizontally in Kubernetes, where new containers are added or removed automatically based on demand.

- Easier Deployment: Go and Rust can produce statically linked binaries (Go does so for pure-Go programs built with cgo disabled, and Rust does so when built for a target such as musl), which makes them much easier to deploy in containers. There are no complex dependency trees or runtime environments to install, which means that containerization and orchestration in Kubernetes are straightforward.

The combination of small, self-contained binaries, fast startup times, and low resource consumption makes Go and Rust the ideal choice for cloud-native applications deployed in Kubernetes.

Go and Rust: The Cloud-Native Dream

Go (or Golang) and Rust are two examples of modern programming languages that are inherently suited for cloud-native deployments. Both are compiled languages that produce machine-ready binaries with minimal dependencies. Unlike Java or .NET, Go and Rust do not require complex runtime environments like the JRE or .NET Framework. Instead, they compile into standalone binaries that are small, fast, and efficient.

Go is particularly well-suited for microservices and cloud-native applications because of its simplicity and speed. It has built-in support for concurrency, making it easy to write highly scalable applications. Additionally, Go’s minimalistic design and small binary size make it an excellent fit for containerization. When you containerize a Go application, the resulting Docker image is often smaller than the base image for legacy languages, leading to faster startup times, less disk space usage, and better scalability.

Rust, known for its safety features and performance, is another great option for cloud-native development. Rust applications are compiled directly into efficient machine code, with a focus on memory safety and concurrency. Because Rust produces small and highly optimized binaries, it is a good choice for containerization and deployment on Kubernetes, especially for performance-sensitive workloads.

Both Go and Rust are designed with cloud-native patterns in mind, which means they integrate well with containerization and Kubernetes orchestration out of the box.

A Better Approach for Legacy Applications

While Go and Rust are great examples of languages that thrive in cloud-native environments, legacy applications built with Java, .NET, and PHP are often more complex to deploy and manage in Kubernetes. These applications typically require a more traditional infrastructure approach that doesn’t involve containerizing everything.

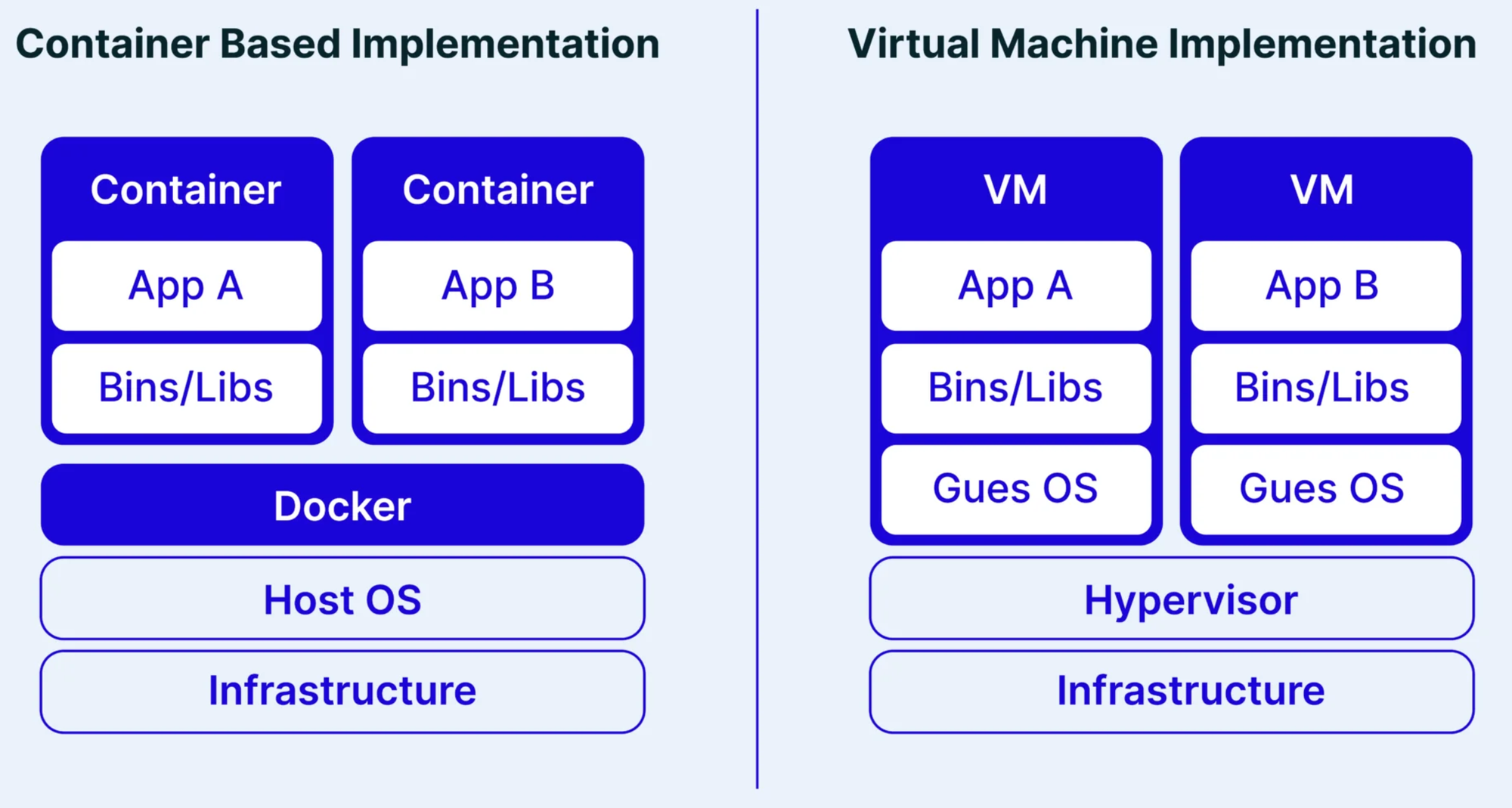

VMs and Bare Metal vs. Containers

For many legacy applications, virtual machines (VMs) or bare-metal servers are better suited than containers. VMs allow for predictable, isolated environments that can handle the complex dependencies and resource requirements of legacy applications. For example, a large-scale Java application that depends on a specific JRE or a .NET application that requires Windows-specific configurations might be more easily managed in a VM that has sufficient resources and pre-configured runtime environments.

On the other hand, bare-metal servers, though less flexible than VMs, can also be a good choice for applications that require consistent, high-performance computing. These systems provide direct access to hardware resources, which can be advantageous for resource-heavy applications.

When running legacy applications on VMs or bare metal, you have the ability to provision resources in a way that is tailored to the specific needs of the application. For instance, you can allocate large amounts of memory and CPU resources to a Java application that requires it, without worrying about the resource overhead caused by Kubernetes’ orchestration mechanisms.

Moreover, legacy applications often rely on a monolithic architecture, where the entire application is deployed as a single unit. While microservices architectures are well-suited for Kubernetes, monolithic applications can be challenging to split into multiple services. In these cases, running the monolithic application in a VM or on bare metal can simplify deployment and ensure that all parts of the application can interact with each other without the complexity of distributed systems.

Optimized Deployment for Legacy Apps

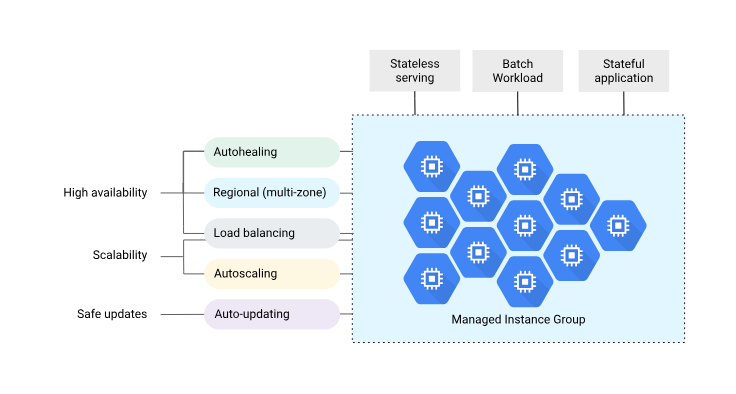

For legacy applications, especially those written in Java, .NET, and PHP, a more optimized deployment strategy may be to use traditional cloud features such as managed instances and auto-scaling.

Cloud providers offer managed groups of virtual machines (Auto Scaling groups on AWS, managed instance groups on Google Cloud, and Virtual Machine Scale Sets on Azure), which allow for the automated scaling and management of VMs without the complexity of Kubernetes. These managed groups can scale up or down based on demand while also providing high availability and fault tolerance.

Additionally, by running legacy applications in virtual machines, you can take advantage of cloud infrastructure features like automated patching, security updates, and monitoring, all without needing to modify the application’s core architecture or adopt Kubernetes.

Hybrid Architectures: Combining Kubernetes and Traditional Infrastructure

For organizations that have a mix of legacy applications and cloud-native services, adopting a hybrid architecture is a practical solution. This approach allows for the use of Kubernetes for modern, cloud-native applications, while still providing a more traditional environment for legacy applications that are better suited for VMs or bare metal.

In a hybrid setup, Kubernetes can be used to orchestrate microservices and modern applications that are built with cloud-native languages like Go and Rust, while legacy applications can run in VMs or on bare-metal servers. The integration between these two environments can be achieved using APIs, messaging queues, or service meshes that facilitate communication between the cloud-native and legacy systems.

For example, an organization might run a cloud-native web frontend in Kubernetes, while a monolithic Java-based backend system runs in a dedicated VM. The two systems can interact via RESTful APIs or gRPC, ensuring seamless communication without forcing the backend into a containerized environment. This hybrid approach enables organizations to slowly transition parts of their application ecosystem to the cloud-native model while maintaining the stability and efficiency of legacy systems.
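
A minimal sketch of the frontend side of such an integration might look like the following. It assumes the legacy backend exposes a JSON endpoint at `GET /orders/{id}`; that endpoint, the host name in the usage comment, and the `fetch_order` function are hypothetical and only serve to illustrate the pattern. The `opener` parameter exists so the call can be replaced in tests.

```python
import json
import urllib.request


def fetch_order(base_url: str, order_id: int,
                opener=urllib.request.urlopen, timeout: float = 2.0) -> dict:
    """Call the (hypothetical) legacy endpoint and decode its JSON body.

    A timeout is set explicitly so that a slow backend on the VM cannot
    hold frontend requests open indefinitely.
    """
    url = f"{base_url.rstrip('/')}/orders/{order_id}"
    with opener(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage from a service running in Kubernetes (hypothetical address of the VM):
#   order = fetch_order("http://legacy-backend.internal:8080", 42)
```

The frontend only needs a stable network address and an agreed contract. Nothing about the call depends on how or where the backend is deployed.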

The use of service meshes like Istio or Linkerd can also help manage traffic between cloud-native and legacy services, ensuring that they can communicate with each other efficiently while retaining their respective deployment models. This makes it easier to modernize parts of the application stack without a complete overhaul.

Real-World Experience and Challenges

From real-world experience, we’ve observed that trying to deploy legacy applications written in Java, .NET, and PHP on Kubernetes often results in a range of challenges. Resource consumption in particular can be a major issue. These applications require significant CPU and memory resources, and Kubernetes’ auto-scaling features may not be sufficient to optimize resource allocation.

For instance, a Java-based web application might run well in a VM with 16 GB of RAM and 4 CPU cores. Containerized with the same JVM settings and without matching memory and CPU limits, it could consume most of the resources on the Kubernetes nodes it lands on, leading to performance degradation and inefficient use of hardware. Scaling such an application horizontally by adding more pods can bring diminishing returns, because every pod runs its own copy of the Java runtime and that fixed overhead eats into the available resources.
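
The diminishing returns can be made concrete with a small calculation. The sketch below assumes each replica pays a fixed runtime cost on top of the memory it uses for real work; the 300 MiB and 200 MiB figures are hypothetical and should be replaced with measurements from the application in question.

```python
def total_memory_mib(replicas: int, runtime_mib: int, working_set_mib: int) -> int:
    """Memory needed when every replica carries its own runtime overhead."""
    return replicas * (runtime_mib + working_set_mib)


def runtime_share(runtime_mib: int, working_set_mib: int) -> float:
    """Fraction of each replica's memory spent on the runtime itself."""
    return runtime_mib / (runtime_mib + working_set_mib)


# Hypothetical figures: 300 MiB of runtime overhead, 200 MiB of working set.
RUNTIME_MIB, WORKING_SET_MIB = 300, 200

for replicas in (1, 4, 8):
    total = total_memory_mib(replicas, RUNTIME_MIB, WORKING_SET_MIB)
    overhead = replicas * RUNTIME_MIB
    print(f"{replicas} replicas: {total} MiB total, {overhead} MiB of it runtime overhead")

print(f"share spent on the runtime: {runtime_share(RUNTIME_MIB, WORKING_SET_MIB):.0%}")
```

Because the overhead is paid once per replica, adding replicas multiplies it. With these assumed figures, more than half of every added pod's memory goes to the runtime rather than to serving requests.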

Additionally, managing these legacy applications in Kubernetes requires more than just spinning up containers. These applications often need to be configured with specific environment variables, configuration files, and external services that can complicate their deployment in a Kubernetes environment. While Kubernetes excels in managing stateless microservices, legacy applications with complex configurations and stateful behaviors require more customized solutions.

Lessons Learned and Best Practices

When deciding whether to containerize an application, especially a legacy one, it’s essential to consider the specific requirements and characteristics of the application. Here are some key lessons and best practices for managing this decision:

- Evaluate the Application’s Architecture: Assess whether the application is designed for horizontal scaling or if it is better suited for monolithic deployments. Legacy applications that were built with specific hardware or configuration requirements might be better suited to run in VMs or on bare metal.

- Consider Resource Usage: Legacy applications written in Java, .NET, and PHP often require significant system resources. Running them in containers can introduce overhead, which may make traditional VM or bare-metal deployments more efficient.

- Use Managed Services When Possible: For legacy applications, consider utilizing cloud features like managed instances or Platform-as-a-Service (PaaS) offerings. These solutions can provide cloud benefits (e.g., auto-scaling, high availability) without requiring a complete refactor of the application.

- Hybrid Deployment Models: Embrace hybrid architectures that combine Kubernetes with traditional infrastructure. This approach allows you to modernize part of your application while maintaining the stability and efficiency of legacy systems.

- Don’t Force Containerization: Just because Kubernetes is a powerful tool doesn’t mean every application should be containerized. For legacy applications, it’s important to determine whether containerization provides tangible benefits or if a more traditional deployment model is a better fit.
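
The checklist above can be turned into a first-pass screening function. The sketch below is only one possible encoding of these lessons: the `AppProfile` fields, the 512 MiB threshold, and the three target labels are assumptions chosen for illustration, and a real decision would weigh many more factors.

```python
from dataclasses import dataclass


@dataclass
class AppProfile:
    stateless: bool
    scales_horizontally: bool
    needs_specific_hardware_or_os: bool
    runtime_overhead_mib: int


def suggest_target(app: AppProfile, heavy_runtime_mib: int = 512) -> str:
    """Return a rough deployment suggestion based on the lessons listed above."""
    # Special hardware or OS configuration points to VMs or bare metal.
    if app.needs_specific_hardware_or_os:
        return "vm-or-bare-metal"
    # Stateless, horizontally scalable, and lightweight: a good Kubernetes fit.
    if (app.stateless and app.scales_horizontally
            and app.runtime_overhead_mib < heavy_runtime_mib):
        return "kubernetes"
    # Otherwise, prefer cloud benefits without a refactor.
    return "managed-vm-or-paas"


# Hypothetical profiles for a small stateless service and a stateful monolith.
print(suggest_target(AppProfile(True, True, False, 50)))
print(suggest_target(AppProfile(False, False, False, 800)))
```

A function like this is best treated as a conversation starter for an architecture review, not as a substitute for measuring the application under realistic load.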