Kubernetes 101: Pod

Pods are the smallest deployable units in Kubernetes that can be created, scheduled, and managed.

Introduction

In today's rapidly evolving digital ecosystem, businesses strive to improve their application deployment mechanisms to enhance performance, efficiency, and scalability. As part of this endeavor, Kubernetes has emerged as a critical orchestrator that simplifies the management of containerized applications. This article focuses on the Pod, the basic unit of deployment in Kubernetes. It covers what a Pod is, how the containers inside it interact, how it moves through its lifecycle, and how scaling, scheduling, and health checks are built around it.

Definition and Use of Pods

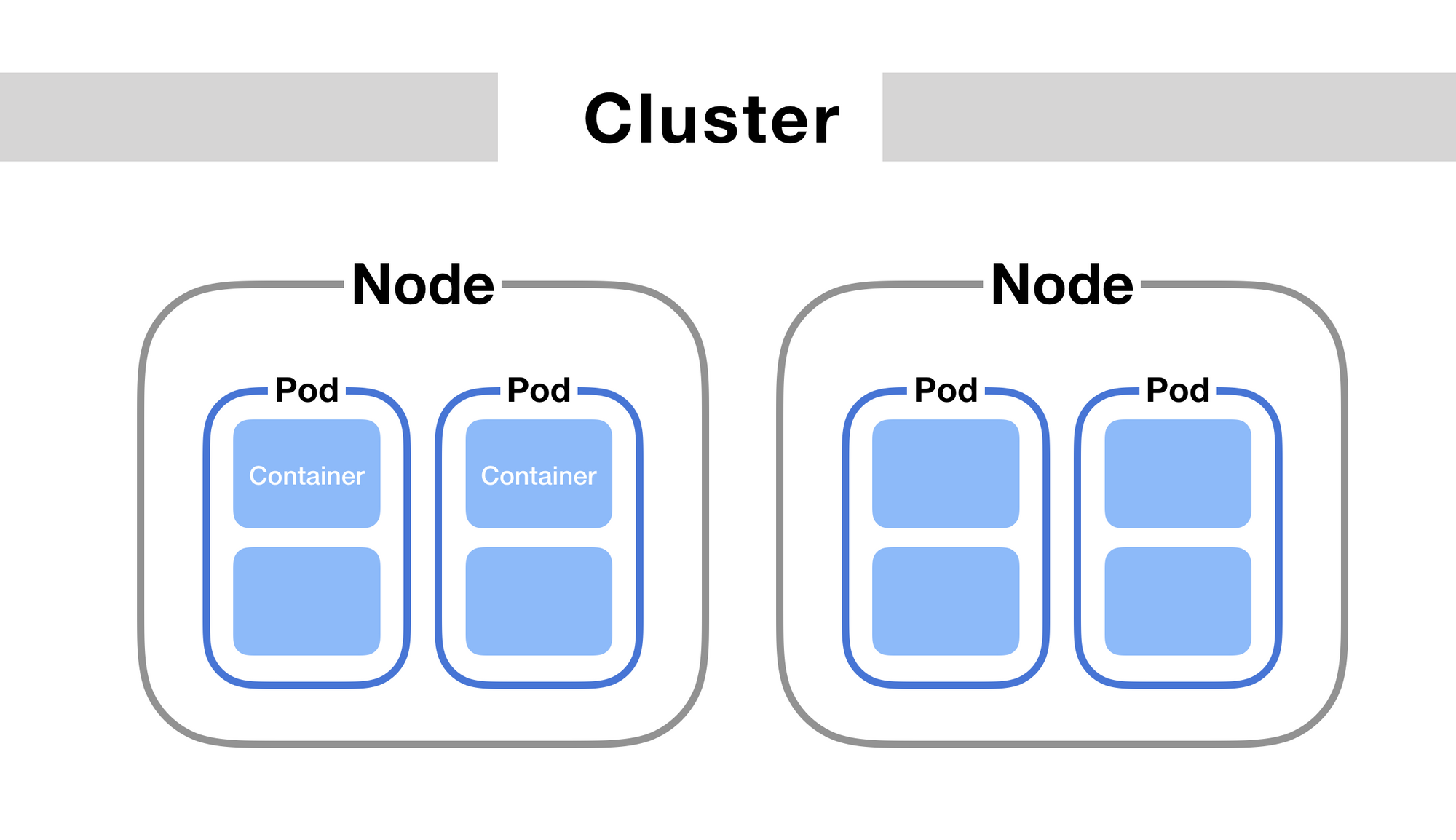

Pods are the smallest deployable units in Kubernetes that can be created, scheduled, and managed. A Pod is a group of one or more containers that share storage and network resources and are always co-located and co-scheduled on the same node. This makes it easy to manage applications that rely on multiple containers, because related containers are always deployed together and have access to the same resources.

Pod Architecture

A Pod encapsulates an application's container (or a group of tightly coupled containers), storage resources, a unique network IP, and options that govern how the containers should run. Each Pod is meant to run a single instance of a given application. To scale an application horizontally, Kubernetes runs more Pods, one per instance, rather than adding more copies of the container to a single Pod.
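
To make these pieces concrete, here is a minimal sketch that builds a single-container Pod manifest as a Python dictionary and prints it as JSON, a format kubectl also accepts. The Pod name `web`, the `nginx:1.25` image, and the `app` label are illustrative assumptions, and storage volumes are omitted to keep it short.

```python
import json

def make_pod(name: str, image: str, port: int) -> dict:
    """Return a minimal Pod manifest (apiVersion v1, kind Pod) with one container."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # An option that governs how the containers run.
            "restartPolicy": "Always",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }
            ],
        },
    }

if __name__ == "__main__":
    # Hypothetical example values; the output could be piped to `kubectl apply -f -`.
    print(json.dumps(make_pod("web", "nginx:1.25", 80), indent=2))
```

To run more instances of this application, you would create more Pods like this one (usually through a Deployment) instead of adding containers to it.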

The Interaction Between Containers in a Pod

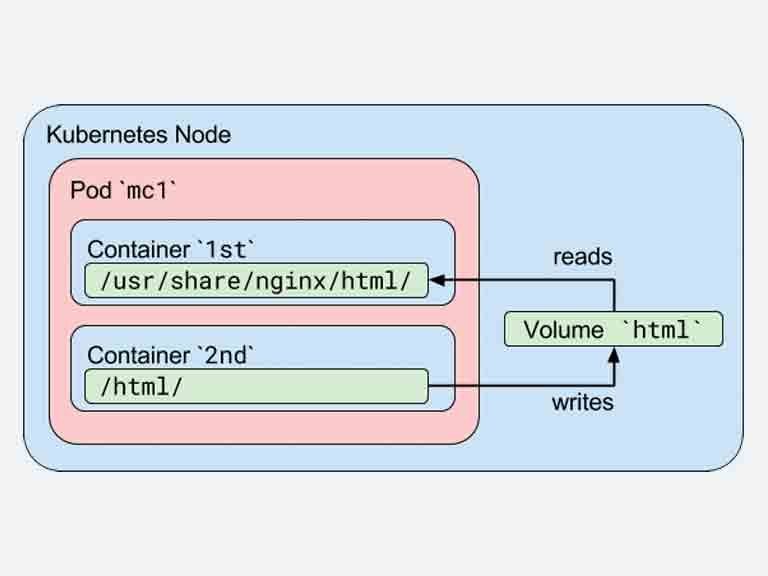

Containers within a Pod have a symbiotic relationship. They share the same network namespace, which means they share an IP address and port space, and can communicate with each other using localhost. They can also share storage volumes, which enables data to be seamlessly accessed across containers.
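
One practical consequence of the shared port space is that two containers in the same Pod cannot listen on the same port. The helper below is a hypothetical pre-flight check, not part of the Kubernetes API, that scans a Pod manifest dictionary for `containerPort` values declared by more than one container.

```python
from collections import defaultdict

def find_port_conflicts(pod: dict) -> dict:
    """Map (port, protocol) -> container names for ports declared by more than one container.

    Containers in a Pod share one network namespace, so two of them cannot
    both bind the same port/protocol pair.
    """
    owners = defaultdict(list)
    for container in pod["spec"]["containers"]:
        for p in container.get("ports", []):
            key = (p["containerPort"], p.get("protocol", "TCP"))
            owners[key].append(container["name"])
    return {key: names for key, names in owners.items() if len(names) > 1}

# Hand-written example: "app" and "proxy" both claim port 8080.
pod = {
    "spec": {
        "containers": [
            {"name": "app", "ports": [{"containerPort": 8080}]},
            {"name": "proxy", "ports": [{"containerPort": 8080}, {"containerPort": 9090}]},
        ]
    }
}
print(find_port_conflicts(pod))  # {(8080, 'TCP'): ['app', 'proxy']}
```

Without the conflict, the `app` container could reach the proxy simply at `localhost:9090`.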

Although a Pod can contain more than one container, it's common practice to run a single container per Pod unless several co-located containers must work together as a single unit. For example, one container may be the main worker while a "sidecar" container supports it, perhaps by populating data, proxying network connections, or managing logs.
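
A minimal sketch of the sidecar pattern as a Pod manifest: the main container appends log lines to a shared `emptyDir` volume, and a sidecar reads the same file. The container names, the `busybox:1.36` image, the mount path, and the shell commands are illustrative assumptions; a real log shipper would forward the lines somewhere rather than just tailing them.

```python
import json

SHARED_VOLUME = "logs"  # hypothetical volume name

def make_sidecar_pod() -> dict:
    """Pod with a main container and a log-reading sidecar sharing an emptyDir volume."""
    mount = {"name": SHARED_VOLUME, "mountPath": "/var/log/app"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "app-with-sidecar"},
        "spec": {
            # An emptyDir volume lives as long as the Pod and is visible
            # to every container that mounts it.
            "volumes": [{"name": SHARED_VOLUME, "emptyDir": {}}],
            "containers": [
                {
                    "name": "main",
                    "image": "busybox:1.36",
                    "command": ["sh", "-c",
                                "while true; do date >> /var/log/app/app.log; sleep 5; done"],
                    "volumeMounts": [mount],
                },
                {
                    "name": "log-sidecar",
                    "image": "busybox:1.36",
                    "command": ["sh", "-c",
                                "touch /var/log/app/app.log; tail -f /var/log/app/app.log"],
                    "volumeMounts": [mount],
                },
            ],
        },
    }

if __name__ == "__main__":
    print(json.dumps(make_sidecar_pod(), indent=2))
```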

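Each container within a Pod still has its own isolated filesystem and process environment, but the containers share the network and IPC namespaces (and, optionally, the process namespace). As a result, they can communicate over localhost or through standard inter-process mechanisms such as System V semaphores or POSIX shared memory, without sending traffic across the cluster network.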

The Pod Lifecycle

A Pod is created with a unique ID (UID) and keeps that UID for its whole lifetime. Once a Pod is scheduled on a node, it remains on that node until its containers finish running, the Pod object is deleted, the Pod is evicted for lack of resources, or the node fails. If a node goes down, the Pods on it are scheduled for deletion after a timeout period. If a node is forcefully deleted, the node controller schedules the Pods on it for deletion.

It's important to note that Pods do not heal themselves. A Pod that is deleted, evicted, or lost along with its node is gone for good; the kubelet can only restart failed containers inside a Pod that is still running, according to the Pod's restartPolicy. If the Pod is part of a higher-level construct such as a ReplicaSet or Deployment, that construct creates a new Pod, with a new UID, to replace the failed one.
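
The replacement behavior can be illustrated with a toy reconciliation loop in the spirit of a ReplicaSet controller: compare the desired replica count with the Pods that are still active, and create new Pods to close the gap. This is a simplified model with hypothetical names, not the actual controller code. Note that the replacement is a brand-new object with its own UID, not the failed Pod restarted.

```python
import uuid
from dataclasses import dataclass, field

@dataclass
class Pod:
    name: str
    uid: str = field(default_factory=lambda: str(uuid.uuid4()))  # fixed for the Pod's lifetime
    phase: str = "Running"

def reconcile(desired: int, pods: list) -> list:
    """Return the Pod list after one reconcile pass (toy model, input is not modified)."""
    # Failed Pods no longer count toward the desired number of replicas.
    live = [p for p in pods if p.phase != "Failed"]
    # Scale up: create brand-new Pods, each with a new UID.
    while len(live) < desired:
        live.append(Pod(name=f"web-{uuid.uuid4().hex[:5]}"))
    # Scale down: drop surplus Pods.
    return live[:desired]

pods = [Pod("web-a"), Pod("web-b"), Pod("web-c")]
pods[1].phase = "Failed"  # e.g. its containers exited with an error
after = reconcile(3, pods)
print(len(after), [p.name for p in after])
```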

- Creation of a Pod: Pods can be created directly or indirectly. Direct creation means submitting a Pod object to the Kubernetes API, whereas indirect creation goes through higher-level controllers such as Deployments, ReplicaSets, Jobs, or DaemonSets.

- Running and Maintaining a Pod: Once a Pod is scheduled to a node, it is assigned a unique IP address and its containers are started. The Pod continues to exist until it is explicitly deleted, evicted, or lost along with its node. Kubernetes does not recreate a standalone Pod that is deleted or whose node fails; only Pods managed by a controller are replaced.

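- Deleting a Pod: When a Pod is deleted, its containers are terminated gracefully. Each container's main process receives a termination signal (typically SIGTERM), and Kubernetes waits up to the Pod's termination grace period (terminationGracePeriodSeconds, 30 seconds by default) before forcibly killing any process that is still running.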
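
Graceful termination only helps if the application reacts to the signal. A minimal sketch of how a Python program running as a container's main process might use the grace period; the `cleanup` body and the polling loop are placeholder assumptions for real work.

```python
import signal
import threading

# Set when the container runtime asks the process to stop.
shutdown_requested = threading.Event()

def handle_sigterm(signum, frame):
    """Record the request; the main loop performs the actual cleanup."""
    shutdown_requested.set()

def cleanup():
    # Placeholder: flush buffers, close connections, finish in-flight work.
    print("cleaning up before exit")

def main(poll_seconds: float = 1.0) -> None:
    signal.signal(signal.SIGTERM, handle_sigterm)
    while not shutdown_requested.is_set():
        # ... serve requests or process a batch of work here ...
        shutdown_requested.wait(poll_seconds)
    # Must finish within the grace period, or the process is killed with SIGKILL.
    cleanup()
```

In a container image, `main()` would be the entry point; the handler only sets a flag so that cleanup runs in the main loop rather than inside the signal handler.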

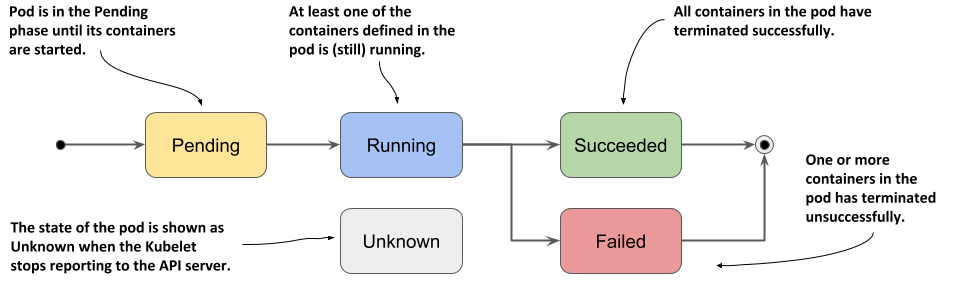

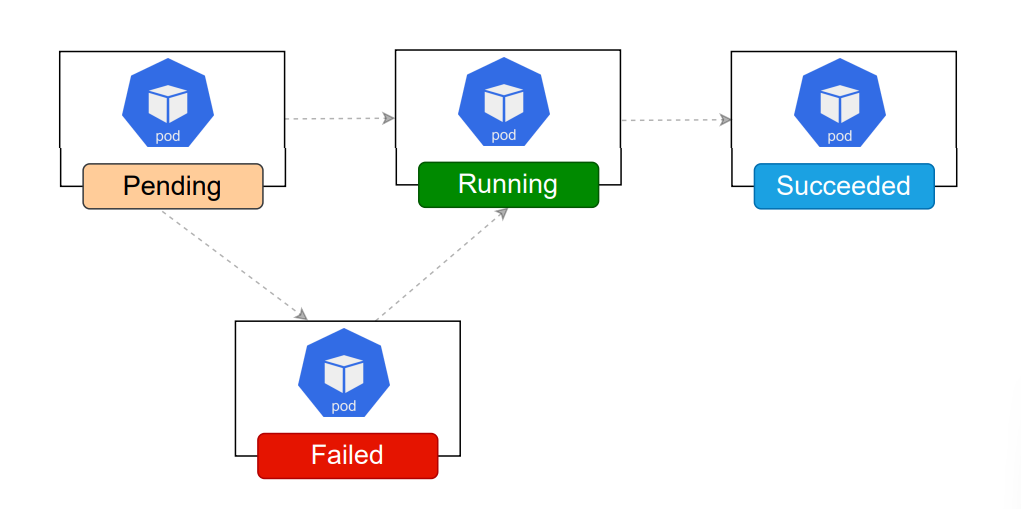

Understanding Pod Statuses and Conditions

The Pod phase (status.phase) gives a high-level summary of where a Pod is in its lifecycle: Pending, Running, Succeeded, Failed, or Unknown. Pod conditions, such as PodScheduled, Initialized, ContainersReady, and Ready, provide more granular information about the Pod's state; each condition is reported as True, False, or Unknown.
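
Both the phase and the conditions live under the Pod's `status` field. A small sketch that summarizes that field from the kind of JSON printed by `kubectl get pod <name> -o json`; the sample status below is hand-written for illustration, not captured from a real cluster.

```python
import json

PHASES = {"Pending", "Running", "Succeeded", "Failed", "Unknown"}

def summarize_status(pod: dict) -> str:
    """Return the Pod phase plus any conditions that are not yet True."""
    status = pod.get("status", {})
    phase = status.get("phase", "Unknown")
    if phase not in PHASES:
        raise ValueError(f"unexpected phase: {phase}")
    pending = [c["type"] for c in status.get("conditions", []) if c.get("status") != "True"]
    return phase if not pending else f"{phase} (not yet: {', '.join(pending)})"

sample = json.loads("""
{"status": {"phase": "Running",
            "conditions": [
              {"type": "PodScheduled", "status": "True"},
              {"type": "Initialized", "status": "True"},
              {"type": "ContainersReady", "status": "False"},
              {"type": "Ready", "status": "False"}]}}
""")
print(summarize_status(sample))  # Running (not yet: ContainersReady, Ready)
```

A Pod can be Running while still not Ready, which is exactly the case the conditions make visible.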

Pods and Kubernetes Operations

Pods are the smallest deployable units of computing in Kubernetes, and this has some significant implications for how Kubernetes operations are conducted.

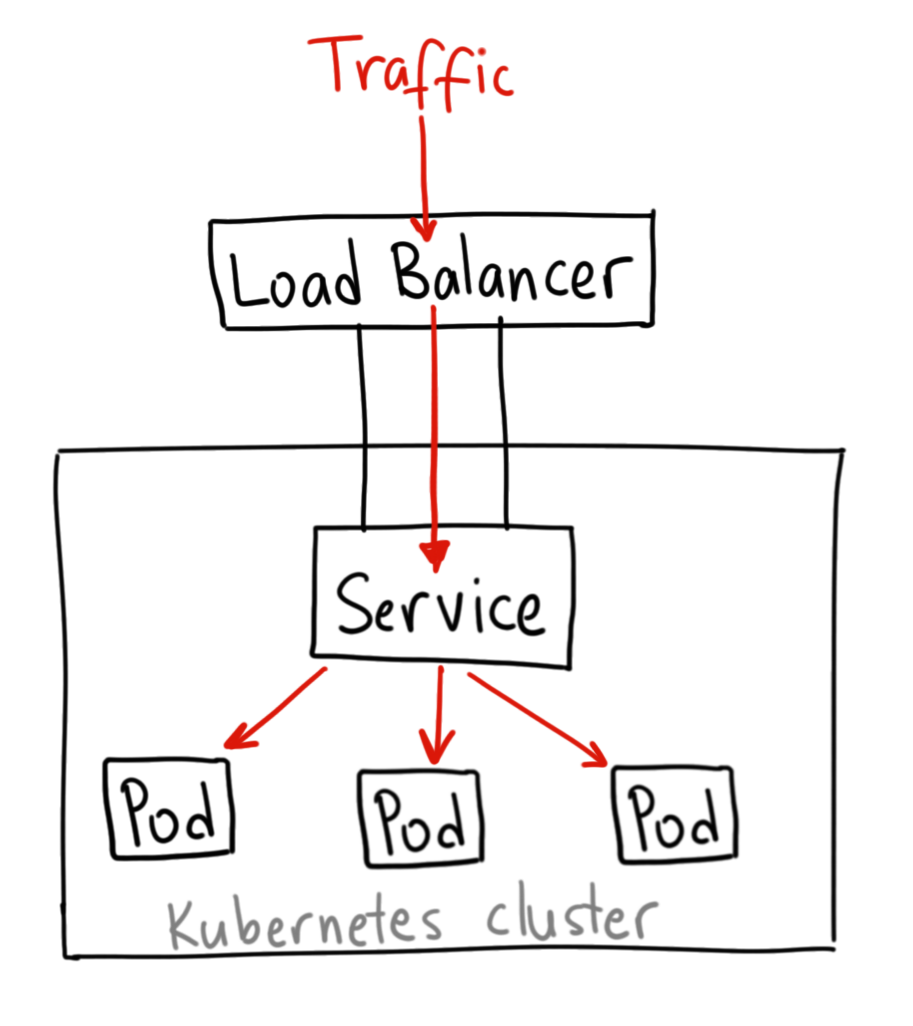

Scaling and Load Balancing

In Kubernetes, scaling (increasing or decreasing the number of replicas of an application) and load balancing (distributing network traffic across a set of Pods) are performed at the Pod level. When you want to scale up an application, you don't add more containers to a Pod; instead, you add more Pods, each running its own instance of the container.
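
In practice, replica Pods are rarely created by hand: a Deployment's `replicas` field tells Kubernetes how many identical Pods to keep running. A sketch that builds such a manifest and scales it, with a hypothetical name and image; note that scaling changes `replicas`, never the container list in the Pod template.

```python
import json

def make_deployment(name: str, image: str, replicas: int) -> dict:
    """Deployment manifest whose Pod template holds a single container."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # number of Pods, not containers
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }

def scale(deployment: dict, replicas: int) -> dict:
    """Return a copy with a new replica count; the Pod template is untouched."""
    scaled = json.loads(json.dumps(deployment))  # simple deep copy of a JSON-safe dict
    scaled["spec"]["replicas"] = replicas
    return scaled
```

On a live cluster, the equivalent change is `kubectl scale deployment web --replicas=5`.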

When you set up a Kubernetes Service (a stable network endpoint that routes traffic to a set of Pods), the Service doesn't route traffic to specific containers. Instead, it selects Pods by label and sends traffic to a port on each selected Pod's IP address; whichever container in that Pod listens on that port receives the traffic.
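
A toy model of that routing: the Service keeps the Pods whose labels match its selector and forwards each connection to `<pod IP>:<targetPort>`. The Pod data is made up, and the round-robin choice is a simplification; kube-proxy in its default iptables mode picks a backend at random.

```python
import itertools

pods = [
    {"name": "web-1", "ip": "10.0.0.11", "labels": {"app": "web"}},
    {"name": "web-2", "ip": "10.0.0.12", "labels": {"app": "web"}},
    {"name": "db-1", "ip": "10.0.0.21", "labels": {"app": "db"}},
]

def select_endpoints(selector: dict, target_port: int, pods: list) -> list:
    """Return 'ip:port' endpoints for Pods whose labels contain every selector pair."""
    return [
        f"{p['ip']}:{target_port}"
        for p in pods
        if all(p["labels"].get(k) == v for k, v in selector.items())
    ]

endpoints = select_endpoints({"app": "web"}, 8080, pods)
next_backend = itertools.cycle(endpoints)  # simplified round robin
for _ in range(3):
    print(next(next_backend))
# 10.0.0.11:8080
# 10.0.0.12:8080
# 10.0.0.11:8080
```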

Pod Scheduling

Kubernetes has a sophisticated scheduler that decides which node each Pod runs on, based on factors such as resource requests and availability, user-defined constraints, and affinity and anti-affinity rules. Each Pod is scheduled individually, and once a Pod is running on a node, it is never moved; to run elsewhere, the Pod must be deleted and a replacement Pod scheduled.
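
The scheduler broadly works in two steps: filtering out nodes that cannot run the Pod, then scoring the remaining ones. The sketch below is a heavily simplified model of that idea with made-up node data. It filters on unrequested CPU and memory plus a `nodeSelector`, then prefers the node with the most CPU left over; the real kube-scheduler combines many more plugins.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Node:
    name: str
    free_cpu_m: int   # allocatable CPU minus existing requests, in millicores
    free_mem_mi: int  # allocatable memory minus existing requests, in MiB
    labels: dict = field(default_factory=dict)

def schedule(cpu_m: int, mem_mi: int, node_selector: dict, nodes: list) -> Optional[str]:
    """Pick a node for one Pod, or None if nothing fits (the Pod would stay Pending)."""
    # Filter: enough free resources and every nodeSelector label must match.
    feasible = [
        n for n in nodes
        if n.free_cpu_m >= cpu_m
        and n.free_mem_mi >= mem_mi
        and all(n.labels.get(k) == v for k, v in node_selector.items())
    ]
    if not feasible:
        return None
    # Score: prefer the node that keeps the most CPU free after placement.
    return max(feasible, key=lambda n: n.free_cpu_m - cpu_m).name

nodes = [
    Node("node-a", free_cpu_m=500, free_mem_mi=1024, labels={"disktype": "ssd"}),
    Node("node-b", free_cpu_m=2000, free_mem_mi=4096, labels={"disktype": "hdd"}),
    Node("node-c", free_cpu_m=1500, free_mem_mi=2048, labels={"disktype": "ssd"}),
]
print(schedule(250, 256, {"disktype": "ssd"}, nodes))  # node-c
print(schedule(4000, 256, {}, nodes))                   # None
```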

Health Checks

Kubernetes can also run health checks through liveness and readiness probes, which are configured per container. A liveness probe checks whether a container is still healthy; if it fails repeatedly, the kubelet restarts that container (not the whole Pod). A readiness probe checks whether a container is ready to serve traffic; while it fails, the Pod is removed from the endpoints of any matching Service, so Kubernetes stops sending it traffic until the probe passes again.
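
A sketch with two parts: a container definition carrying both probes (the field names come from the Pod API; the image, path, port, and timings are illustrative), and a toy function modeling how the kubelet counts consecutive liveness failures against `failureThreshold` (3 by default) before restarting the container.

```python
container = {
    "name": "web",
    "image": "nginx:1.25",
    "livenessProbe": {
        "httpGet": {"path": "/", "port": 80},
        "periodSeconds": 10,
        "failureThreshold": 3,
    },
    "readinessProbe": {
        "httpGet": {"path": "/", "port": 80},
        "periodSeconds": 5,
    },
}

def restart_points(results: list, failure_threshold: int = 3) -> list:
    """Indexes of probe results at which the container would be restarted (toy model).

    A restart happens after `failure_threshold` consecutive failures; any success
    resets the count, and counting starts over after each restart.
    """
    restarts, consecutive = [], 0
    for i, ok in enumerate(results):
        consecutive = 0 if ok else consecutive + 1
        if consecutive >= failure_threshold:
            restarts.append(i)
            consecutive = 0
    return restarts

probes = [True, False, False, True, False, False, False, True]
print(restart_points(probes, container["livenessProbe"]["failureThreshold"]))  # [6]
```

The two isolated failures at the start do not trigger a restart because a success interrupts them; only the run of three failures does.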

Importance of Pods in Kubernetes Architecture

While a Pod might seem like just another layer of complexity in the Kubernetes system, it is a fundamental part of the system's architecture. By providing a context for containers to run together with shared resources, Pods enable powerful patterns of application architecture.

Even though the containers are the ones running the actual applications, the Pod is the key operational component in the Kubernetes ecosystem. Understanding Pods, their lifecycle, and their interaction with other Kubernetes objects is crucial for effectively running applications on Kubernetes. It's the Pod that represents the "unit of deployment" in a Kubernetes system, and all scheduling and scaling operations take place at the level of Pods, not individual containers.

In conclusion, the Pod, as the smallest unit of Kubernetes operation, is an essential concept in Kubernetes, acting as the foundation for higher-level concepts like Deployments, ReplicaSets, and Services. It is, in many ways, the heart of the Kubernetes system.

About 8grams

We are a small DevOps Consulting Firm that has a mission to empower businesses with modern DevOps practices and technologies, enabling them to achieve digital transformation, improve efficiency, and drive growth.

Ready to transform your IT Operations and Software Development processes? Let's join forces and create innovative solutions that drive your business forward.
