K3s: Lightweight Kubernetes for Single-Server, Edge, and IoT Deployments


Introduction

Kubernetes has become the de facto solution for orchestrating containerized applications in cloud and data center environments. However, the needs of edge computing and Internet of Things (IoT) deployments, which often have limited resources and unique networking constraints, led to a demand for lightweight Kubernetes solutions. One such solution is k3s, a lightweight Kubernetes distribution developed by Rancher Labs, designed to run efficiently on resource-limited devices and environments. This article explores what k3s is, why it was created, how it differs from other distributions, and its best use cases.

What is k3s?

K3s is a fully compliant, certified Kubernetes distribution that is optimized for simplicity and low resource consumption. Designed by Rancher Labs, k3s offers a streamlined Kubernetes experience that retains essential Kubernetes features but removes components that are less critical for small-scale or edge deployments. It is packaged as a single binary, simplifying deployment and management, especially in environments where resources such as memory, CPU, and storage are limited. Despite its lightweight nature, k3s is still capable of orchestrating complex containerized applications across clusters of devices, making it a powerful tool for edge computing and IoT projects.

The Idea Behind k3s’s Creation

The need for a lightweight Kubernetes distribution became evident as companies began deploying Kubernetes on the edge and in IoT environments. Traditional Kubernetes clusters, with their reliance on multiple components and often complex setup, were not well suited for small or resource-constrained environments. Recognizing this gap, Rancher Labs developed k3s to bring Kubernetes's power to environments where ease of deployment and operational efficiency were paramount. By creating a minimal, easy-to-deploy Kubernetes distribution, Rancher Labs enabled developers to leverage Kubernetes in places previously thought impractical, such as rural areas, IoT devices, or low-power computing nodes.

Why We Need k3s

Traditional Kubernetes clusters require significant resources and a complex setup, making them challenging to deploy and maintain in environments where compute power is limited or network connectivity is inconsistent. For edge computing applications, the focus is often on decentralization and running applications close to data sources, which demands a Kubernetes distribution that is adaptable and doesn’t strain limited infrastructure. K3s is designed to overcome these obstacles, addressing problems related to:

- Resource Usage: By removing unnecessary components and using lightweight alternatives (like replacing etcd with SQLite by default), k3s uses significantly less CPU and memory than traditional Kubernetes.

- Simplified Operations: K3s comes as a single binary, which drastically reduces installation complexity. It runs the Kubernetes control-plane components inside a single process rather than as separate services, allowing for quick setup and lower operational overhead.

- Edge Computing and IoT Needs: K3s enables Kubernetes to operate at the edge or in IoT environments, where low latency, efficient resource usage, and resilience to intermittent connectivity are essential.

Differences Between k3s and Other Kubernetes Distributions

K3s is often compared to other Kubernetes distributions, ranging from lightweight options such as MicroK8s to enterprise platforms such as OpenShift and Rancher Kubernetes Engine (RKE). Each has unique features and target audiences, with k3s standing out for its simplicity and low resource requirements.

K3s vs. MicroK8s

MicroK8s, developed by Canonical, is another lightweight Kubernetes distribution that focuses on ease of use and fast installation. While both MicroK8s and k3s aim to streamline Kubernetes, they take different approaches. MicroK8s is distributed as a Canonical “snap” package and ships most components as add-ons that users enable only when they need them. In contrast, k3s comes with a predefined set of components (such as Traefik for ingress and Flannel for networking), which simplifies setup. For its datastore, MicroK8s uses dqlite, a distributed variant of SQLite, by default, while a single-server k3s installation uses embedded SQLite. Both are small, but k3s’s single binary and minimal footprint make it a common choice for edge computing scenarios where resource availability is highly constrained.

K3s vs. OpenShift

OpenShift, an enterprise-grade Kubernetes distribution by Red Hat, targets large organizations that require extensive DevOps tools, security features, and a robust ecosystem. OpenShift provides features such as Service Mesh, Serverless, and CI/CD integration but is resource-intensive and best suited for enterprise-level deployments with sufficient infrastructure. Unlike k3s, a standard OpenShift deployment is not designed for edge computing or low-resource environments. Therefore, k3s is preferred for lightweight, edge, or IoT deployments, while OpenShift is favored for robust enterprise environments with comprehensive DevOps needs.

K3s vs. RKE

RKE (Rancher Kubernetes Engine), also by Rancher Labs, is a flexible Kubernetes distribution aimed at enterprise deployments. RKE offers advanced configuration options and is commonly provisioned and managed through Rancher, which adds multi-cluster management, making it suitable for large-scale production environments. However, unlike k3s, RKE is not designed for low-resource environments or edge computing. K3s's single-binary architecture and minimalistic setup make it more appropriate for edge, IoT, and smaller clusters, while RKE caters to enterprise needs requiring high availability and centralized management across multiple clusters.

| Feature | K3s | MicroK8s | OpenShift | RKE (Rancher Kubernetes Engine) |
|---|---|---|---|---|
| Deployment & Installation | Single binary, lightweight, quick setup | Simple installation via snap package | Complex setup with enterprise-level tools | Flexible setup, suited for large environments |
| Resource Requirements | Minimal; uses SQLite instead of etcd by default | Low to moderate; uses dqlite by default | High; includes extra tools like Service Mesh | Moderate to high; supports large-scale setups |
| Target Audience | Edge computing, IoT, small clusters | IoT, small clusters, development | Enterprises needing advanced DevOps tools | Enterprises requiring multi-cluster management |
| Included Components | Traefik for ingress, Flannel for networking | Modular add-ons as needed | Full suite including CI/CD, security tools | Customizable components based on use case |
| Best Use Case | Edge and IoT environments, CI/CD pipelines | Testing, small IoT clusters, lightweight deployments | Enterprise deployments with heavy resource requirements | Enterprise-grade, multi-cluster Kubernetes |

Use Cases

K3s excels in scenarios where traditional Kubernetes would be too resource-intensive or complex to deploy. Its lightweight design makes it ideal for edge computing, where nodes may have limited memory, CPU, and storage. In IoT applications, k3s allows organizations to deploy and manage containerized workloads on modest hardware, such as single-board computers and gateways that collect data from nearby sensors. Additionally, k3s is popular in CI/CD pipelines and testing environments, where its minimal resource requirements allow test clusters to be spun up quickly and easily. Other ideal use cases include remote or disconnected clusters that operate with intermittent connectivity, and environments where cost savings and operational efficiency are paramount.

Architecture

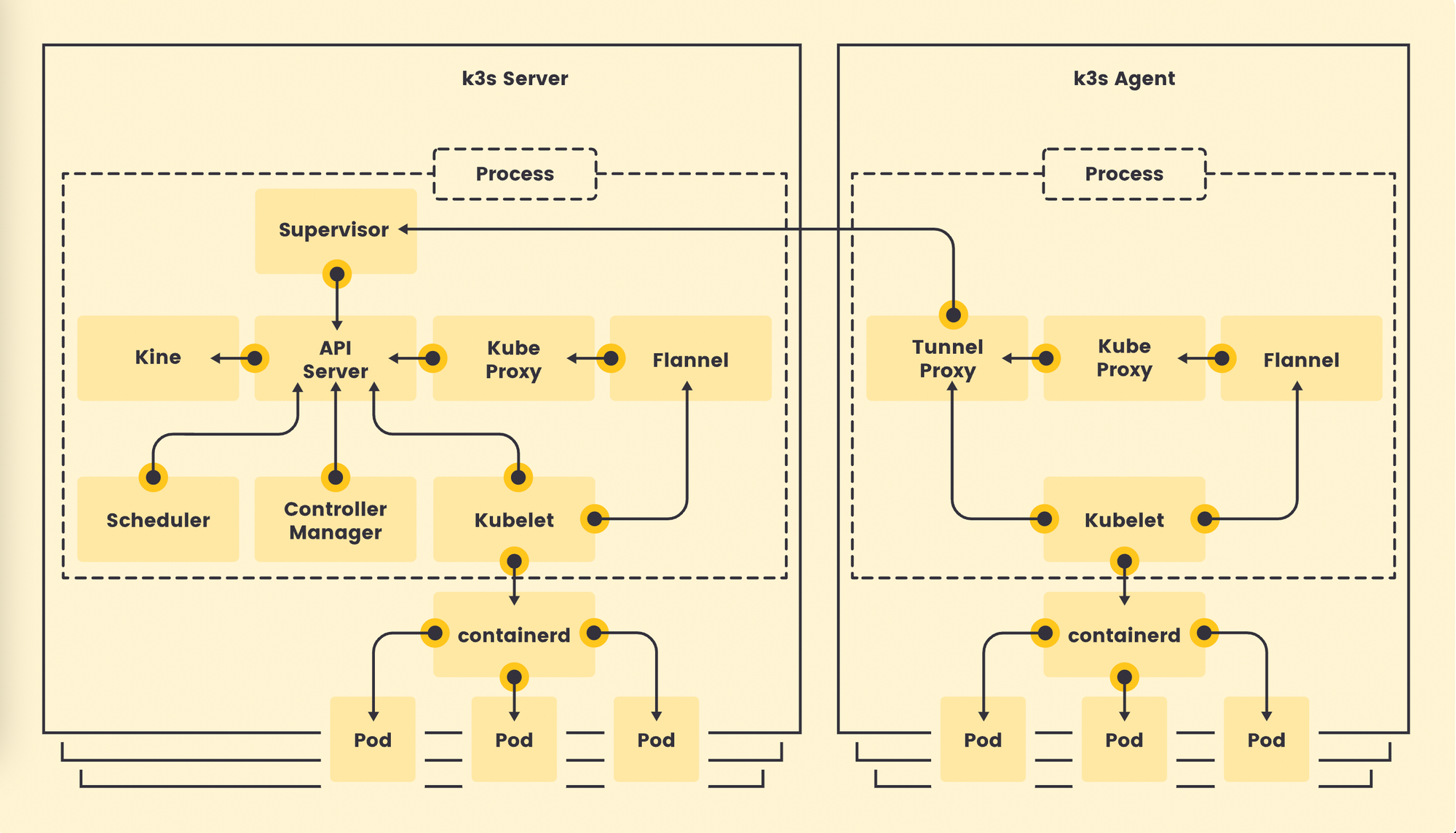

The architecture of k3s is designed to make Kubernetes lightweight, efficient, and easy to deploy in environments with limited resources. Unlike traditional Kubernetes distributions, which include multiple components and require significant computing resources, k3s streamlines the architecture by bundling core components, replacing heavy dependencies, and packaging everything into a single binary. This design is highly suited for edge computing, IoT, and other resource-constrained environments.

Here’s a breakdown of the primary components within k3s:

Single-Binary Package

K3s is packaged as a single binary that contains all the essential components needed to set up and run a Kubernetes cluster. This includes the core Kubernetes components, the container runtime, and the bundled networking and ingress components. The single-binary design simplifies installation and reduces the potential for compatibility issues or misconfiguration. The same binary runs a node as either a server or an agent, so installing, upgrading, and deploying k3s all revolve around one file, which makes it particularly easy to use.

Embedded SQLite Datastore (Default)

Whereas upstream Kubernetes stores cluster state in etcd, k3s uses SQLite by default, accessed through kine, a shim that translates etcd API calls into SQL queries. SQLite has a smaller footprint and lower memory requirements, which reduces resource consumption and suits smaller clusters and environments with limited resources. When a more robust or highly available datastore is needed, k3s can instead use embedded etcd or an external etcd, MySQL, MariaDB, or PostgreSQL database, allowing flexibility for larger or more resilient deployments.

- SQLite: Lightweight, fast, and requires minimal resources. Suitable for single-node clusters or non-critical edge deployments.

- etcd or External Databases: Available as options for multi-node, high-availability setups where data redundancy and resilience are required.
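To make the datastore choice concrete, the sketch below assembles values for the k3s server's `--datastore-endpoint` flag. The URI shapes follow the formats in the k3s datastore documentation; the helper name `datastore_endpoint`, the host names, and the credentials are hypothetical, and it assumes the credentials contain no characters that need escaping.

```python
from typing import List, Optional


def datastore_endpoint(kind: str, hosts: List[str], user: str = "",
                       password: str = "", database: str = "k3s",
                       port: Optional[int] = None) -> str:
    """Hypothetical helper that formats a k3s --datastore-endpoint value.

    Assumes the credentials need no escaping.
    """
    if kind == "mysql":
        # MySQL/MariaDB use the Go driver's tcp(host:port) address syntax.
        return f"mysql://{user}:{password}@tcp({hosts[0]}:{port or 3306})/{database}"
    if kind == "postgres":
        return f"postgres://{user}:{password}@{hosts[0]}:{port or 5432}/{database}"
    if kind == "etcd":
        # External etcd: comma-separated client URLs, one per cluster member.
        return ",".join(f"https://{host}:{port or 2379}" for host in hosts)
    raise ValueError(f"unsupported datastore kind: {kind!r}")


print(datastore_endpoint("mysql", ["db.example.internal"], "k3s", "secret"))
# mysql://k3s:secret@tcp(db.example.internal:3306)/k3s
```

Leaving the flag unset keeps the embedded SQLite default; for embedded etcd, the first server is started with `--cluster-init` instead of pointing at an external datastore.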

Container Runtime (Containerd)

K3s uses containerd as the default container runtime, rather than Docker, to manage and run containers. Containerd, the runtime that Docker itself builds on, implements the Kubernetes Container Runtime Interface (CRI) directly and is lighter than a full Docker installation, allowing k3s to reduce dependencies. This choice aligns with upstream Kubernetes, which removed its built-in Docker integration (dockershim) in version 1.24, enabling k3s to remain lean without sacrificing functionality or compatibility.

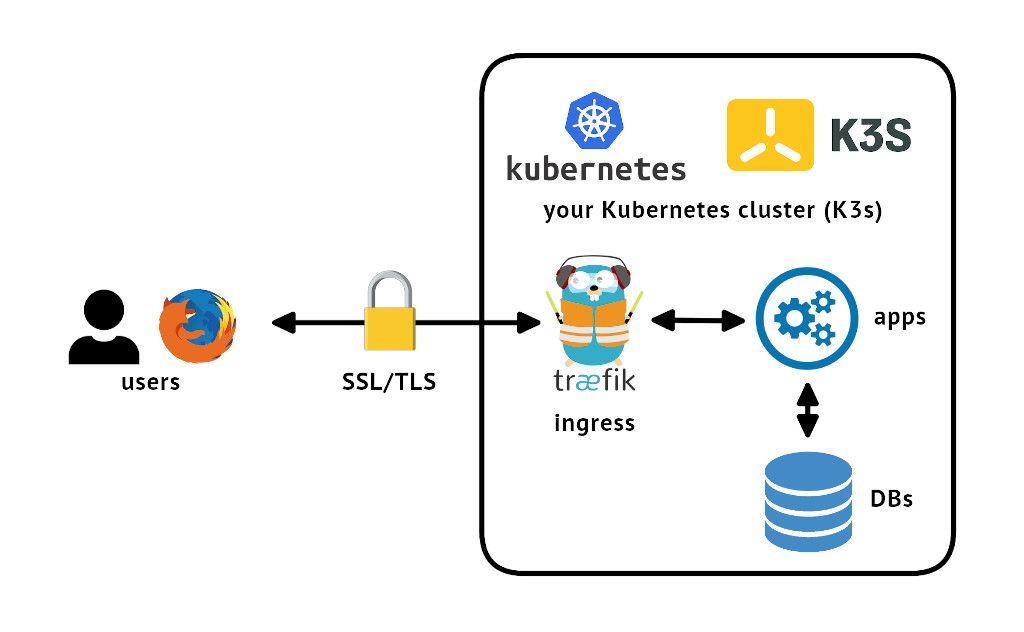

Traefik for Ingress

K3s includes Traefik as the default ingress controller, which is responsible for routing external traffic into the cluster. Traefik is a lightweight and flexible ingress controller that provides load balancing and integrates easily with k3s. By including Traefik out of the box, k3s saves users from needing to configure and deploy an additional ingress controller. However, Traefik can be disabled if users prefer to use a different ingress solution.
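A minimal sketch of an Ingress that the bundled Traefik controller could serve, built in Python as a plain dictionary and printed as JSON (which `kubectl apply -f` accepts). It follows the standard `networking.k8s.io/v1` Ingress schema; the names, host, and port are hypothetical. The manifest omits `ingressClassName`, so it assumes Traefik is the cluster's default ingress class, as is typical in a stock k3s install.

```python
import json


def make_ingress(name: str, host: str, service: str, port: int) -> dict:
    """Build a networking.k8s.io/v1 Ingress routing every path on `host` to one Service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "rules": [{
                "host": host,
                "http": {
                    "paths": [{
                        "path": "/",
                        "pathType": "Prefix",
                        "backend": {
                            "service": {"name": service, "port": {"number": port}},
                        },
                    }],
                },
            }],
        },
    }


manifest = make_ingress("web", "app.example.local", "web", 80)
print(json.dumps(manifest, indent=2))
```

To run a different controller instead, Traefik can be turned off with the `--disable traefik` server option.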

Flannel for Networking

K3s uses Flannel as the default Container Network Interface (CNI) plugin to manage networking within the cluster. Flannel is a simple and efficient CNI that establishes an overlay network, allowing k3s nodes to communicate with each other seamlessly. This choice keeps networking straightforward and is particularly well-suited for smaller clusters or edge deployments, where ease of setup and low resource consumption are priorities. Like Traefik, Flannel can be replaced with a different CNI if a more advanced networking setup is required.
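The address arithmetic behind the pod network can be sketched with Python's `ipaddress` module. It assumes k3s's default cluster CIDR of 10.42.0.0/16 and a /24 pod subnet per node (the usual Kubernetes default); the node names are hypothetical, and the sequential assignment order is only illustrative, since the real allocator does not promise a particular order.

```python
import ipaddress

# Assumed defaults: k3s's pod network (--cluster-cidr) and a /24 per node.
CLUSTER_CIDR = ipaddress.ip_network("10.42.0.0/16")
NODE_PREFIX = 24


def node_subnets(node_names):
    """Hand out consecutive per-node pod subnets (illustrative order only)."""
    pool = list(CLUSTER_CIDR.subnets(new_prefix=NODE_PREFIX))
    if len(node_names) > len(pool):
        raise ValueError(f"cluster CIDR only has room for {len(pool)} nodes")
    return dict(zip(node_names, pool))


for node, subnet in node_subnets(["server-1", "agent-1", "agent-2"]).items():
    print(f"{node}: pods get addresses from {subnet}")
```

With these defaults the pod network has room for 256 node subnets, and Flannel's overlay carries traffic between them. Services draw their virtual IPs from a separate range, 10.43.0.0/16 by default.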

Kube-Proxy

Kube-proxy is a standard Kubernetes component that maintains network rules on each node so that traffic addressed to a Service reaches the right pods. It distributes requests for a Service across the pods that back it and ensures that traffic flows correctly, regardless of which node a pod is running on. In k3s, kube-proxy runs embedded within the k3s process rather than as a separately deployed component. This functionality is essential both for internal communication within the cluster and for handling external requests routed by the ingress controller.
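How kube-proxy spreads traffic evenly can be checked with exact fractions. In iptables mode, kube-proxy installs one rule per endpoint, where rule i (counting from 0) of N matches with probability 1/(N - i) and the last rule always matches. The sketch below (the function name `endpoint_shares` is hypothetical) computes each endpoint's overall share and shows that the chain works out to a uniform 1/N.

```python
from fractions import Fraction


def endpoint_shares(n: int) -> list:
    """Overall chance of each endpoint being chosen by a chain of n random-match rules."""
    remaining = Fraction(1)  # probability that no earlier rule has matched yet
    shares = []
    for i in range(n):
        p_rule = Fraction(1, n - i)  # rule i matches with probability 1/(n - i)
        shares.append(remaining * p_rule)
        remaining *= 1 - p_rule
    return shares


print(endpoint_shares(3))  # [Fraction(1, 3), Fraction(1, 3), Fraction(1, 3)]
```

Decreasing per-rule probabilities compensate for the traffic already claimed by earlier rules, which is why no endpoint is favoured.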

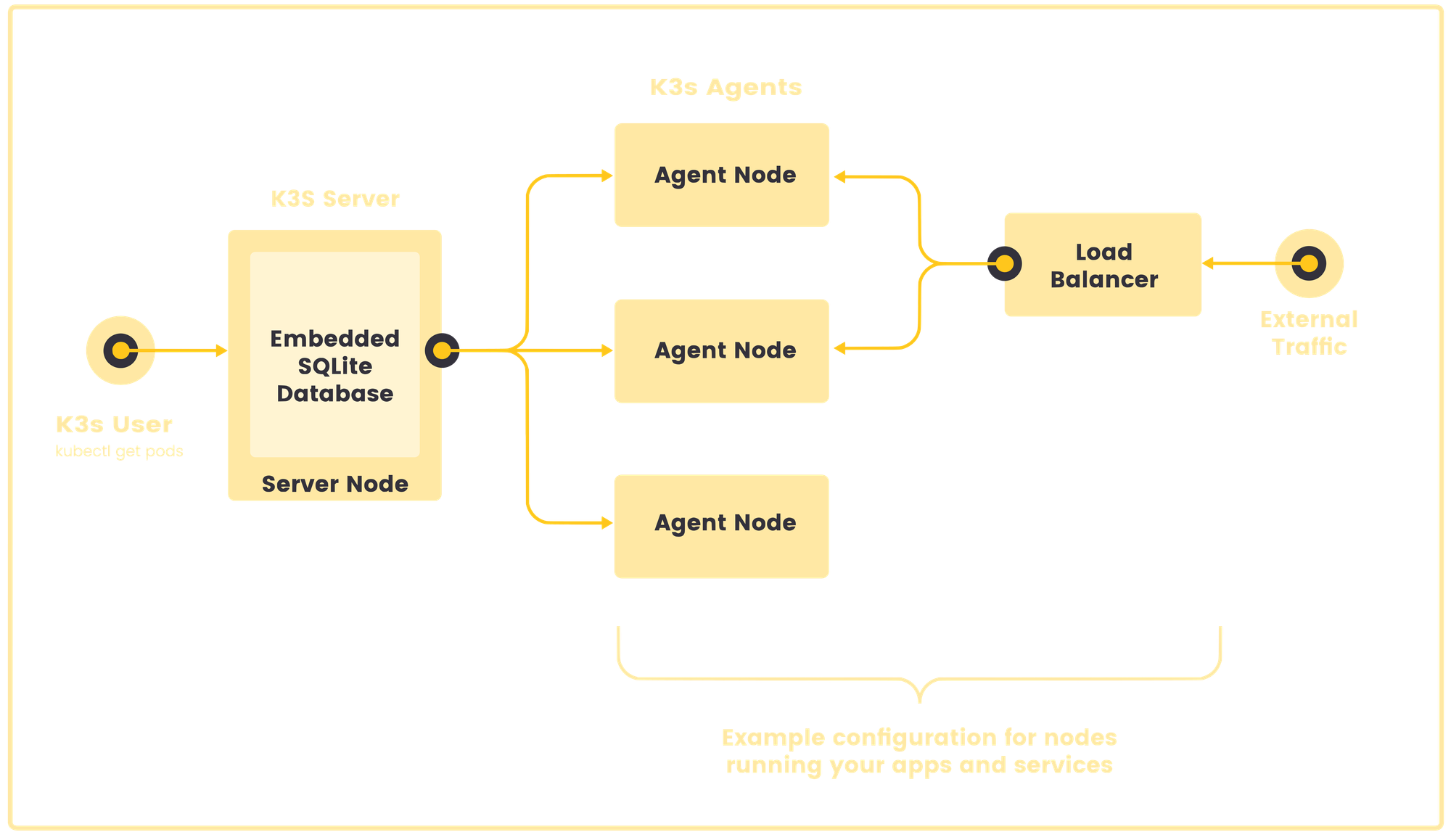

K3s Agent and Server Nodes

In k3s architecture, nodes are classified as server or agent nodes, similar to control plane and worker nodes in standard Kubernetes.

- Server Node: The server node runs the control plane, managing cluster resources, scheduling, and coordinating activity across all nodes. The server node includes core Kubernetes components like the API Server, Scheduler, and Controller Manager. Server nodes in k3s are lightweight yet run the full control plane, and by default they can also run workloads, making k3s viable for single-node or small clusters. In high-availability setups, multiple server nodes share cluster state through either embedded etcd or an external database.

- Agent Node: Agent nodes handle the workloads and run the pods, much like worker nodes in Kubernetes. Each agent node communicates with the server node to receive tasks and report status. This division allows k3s to scale horizontally by adding more agent nodes without overburdening the server node, especially in edge deployments where many nodes may be spread across different geographical locations.
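Joining an agent to a server mostly comes down to two settings: the server's URL and its join token. The sketch below renders a k3s configuration file for an agent, assuming the file lives at /etc/rancher/k3s/config.yaml (the k3s default location), the server listens on port 6443, and the token was copied from /var/lib/rancher/k3s/server/node-token on the server. The helper name, address, token, and label are hypothetical; the keys mirror the `--server`, `--token`, and `--node-label` flags.

```python
def agent_config(server_host: str, token: str, labels: dict) -> str:
    """Render an agent's /etc/rancher/k3s/config.yaml (hypothetical helper)."""
    lines = [
        f'server: "https://{server_host}:6443"',  # same as --server
        f'token: "{token}"',                       # same as --token
    ]
    if labels:
        lines.append("node-label:")               # same as repeated --node-label
        lines += [f'  - "{key}={value}"' for key, value in sorted(labels.items())]
    return "\n".join(lines) + "\n"


print(agent_config("10.0.0.10", "example-token", {"site": "warehouse-3"}))
```

Labels such as a site name let workloads be pinned to particular locations with node selectors once many agents are spread across the edge.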

Embedded Helm Controller

K3s includes an embedded Helm controller that simplifies deploying Helm charts directly within the cluster. Helm is widely used to package and manage Kubernetes applications, and by integrating Helm support, k3s enables users to install, upgrade, and manage applications in a straightforward, consistent way. The Helm controller watches HelmChart custom resources and installs or upgrades the corresponding charts, so applications are configured declaratively, in line with the simplicity and ease-of-use philosophy of k3s.
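The embedded Helm controller acts on HelmChart resources in the `helm.cattle.io/v1` API group. The sketch below builds one in Python and prints it as JSON, which is also valid YAML; the chart name, repository URL, and values are hypothetical placeholders. The output could be applied with `kubectl apply -f`, or saved as a `.yaml` file in k3s's auto-deploy directory, /var/lib/rancher/k3s/server/manifests.

```python
import json

# Hypothetical chart and repository; the field names follow the HelmChart resource.
helm_chart = {
    "apiVersion": "helm.cattle.io/v1",
    "kind": "HelmChart",
    "metadata": {"name": "my-app", "namespace": "kube-system"},
    "spec": {
        "repo": "https://charts.example.com",
        "chart": "my-app",
        "targetNamespace": "default",
        # Chart values are passed as an embedded YAML string.
        "valuesContent": "replicaCount: 2\n",
    },
}

# JSON is valid YAML 1.2, so this text can be saved as e.g. my-app.yaml.
manifest = json.dumps(helm_chart, indent=2)
print(manifest)
```

Editing the resource (for example, changing `valuesContent`) prompts the controller to upgrade the release, so the chart's desired state lives in a single declarative object.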

Optional Add-ons

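While k3s is packaged to be minimal, it ships several packaged components that extend its functionality. They are deployed by default and can be disabled individually when they are not needed. Common examples include:

- Metrics Server: Collects CPU and memory usage for pods and nodes and exposes it through the Kubernetes Metrics API, which powers kubectl top and the Horizontal Pod Autoscaler.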

- CoreDNS: For internal DNS services within the cluster.

- Local Path Provisioner: A lightweight storage provider that supports persistent storage using local disk storage on nodes.

Because each of these components can be turned off, users can tailor the cluster to their needs without overloading it or increasing its resource footprint unnecessarily.
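In a stock install these components are deployed automatically, and trimming them is done with the server's `--disable` option or the matching `disable` key in /etc/rancher/k3s/config.yaml. The sketch below renders that key; the set of names reflects the packaged components listed in the k3s documentation (CoreDNS, ServiceLB, Traefik, Local Path Provisioner as `local-storage`, and Metrics Server), newer releases may accept more, and the helper name is hypothetical.

```python
# Component names accepted by k3s's --disable option (per the k3s docs;
# newer releases may accept more). Local Path Provisioner is "local-storage".
PACKAGED = {"coredns", "servicelb", "traefik", "local-storage", "metrics-server"}


def disable_config(components) -> str:
    """Render the `disable` key of a k3s server config.yaml (hypothetical helper)."""
    unknown = set(components) - PACKAGED
    if unknown:
        raise ValueError(f"not a packaged component: {sorted(unknown)}")
    lines = ["disable:"] + [f"  - {name}" for name in sorted(set(components))]
    return "\n".join(lines) + "\n"


print(disable_config(["traefik", "metrics-server"]))
```

Disabling CoreDNS removes in-cluster name resolution, so it is usually only done when another DNS add-on takes its place.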

Capabilities of k3s

Despite its lightweight design, k3s retains most of the essential features of Kubernetes, making it a robust solution even for production-grade environments. K3s is particularly well-suited for edge computing, IoT, and other resource-constrained applications, but it doesn’t sacrifice core Kubernetes functionality. Here’s an overview of k3s’s primary capabilities:

Core Kubernetes Functionality

K3s provides all the standard Kubernetes features, including scheduling, scaling, service discovery, and self-healing. These core functionalities make it possible to manage applications in containers across multiple nodes, ensuring resilience, scalability, and effective resource utilization. Each pod in k3s operates as it would in any other Kubernetes environment, with Kubernetes handling scheduling, deployment, and lifecycle management.

Lightweight and Efficient Resource Usage

One of k3s’s most valuable capabilities is its resource efficiency. By default, k3s uses SQLite as its datastore instead of etcd, a choice that substantially reduces memory and CPU requirements. K3s also drops legacy, alpha, and non-default Kubernetes features, as well as most in-tree cloud provider and storage drivers, which minimizes operational overhead. This efficiency allows k3s to run smoothly on devices with limited computing power, such as single-board computers (e.g., Raspberry Pi), and makes it viable for small clusters with minimal hardware resources.

Integrated Networking and Load Balancing

K3s includes Flannel as its default Container Network Interface (CNI) and Traefik as its default ingress controller, providing built-in networking and load balancing capabilities. This setup enables easy communication between services within the cluster and allows for the routing of external traffic to the appropriate services. Additionally, Flannel’s simple overlay network ensures that nodes in the cluster can communicate seamlessly without complex configurations.

High Availability (HA) Setup Options

K3s supports high-availability configurations, making it resilient for production environments where uptime is critical. In an HA setup, multiple server nodes provide redundancy and store cluster state either in embedded etcd or in an external database such as MySQL or PostgreSQL. This configuration allows k3s to keep operating if a server node fails, within the fault tolerance of the chosen datastore, enhancing reliability for edge deployments that may face connectivity or hardware issues.
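When the servers use embedded etcd, availability follows etcd's majority rule: writes need a quorum of floor(N/2) + 1 servers. The sketch below (function names hypothetical) tabulates that rule and shows why three servers is the usual minimum and why a fourth server does not raise fault tolerance. It does not apply to external-datastore HA, where availability depends on the external database instead.

```python
def etcd_quorum(servers: int) -> int:
    """Votes needed for a majority among `servers` etcd members."""
    return servers // 2 + 1


def tolerated_failures(servers: int) -> int:
    """Servers that can be lost while a majority remains."""
    return servers - etcd_quorum(servers)


for n in range(1, 6):
    print(f"{n} server(s): quorum {etcd_quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Two servers tolerate no more failures than one, and four tolerate no more than three, which is why odd server counts are recommended.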

Multi-Cluster Management

K3s is compatible with Rancher’s multi-cluster management platform, allowing users to manage multiple k3s clusters from a centralized interface. This capability is particularly valuable in edge and IoT applications, where clusters may be geographically dispersed. With multi-cluster management, administrators can monitor, control, and configure all clusters from a single dashboard, simplifying operations and ensuring consistency across deployments.

Support for Helm and Helm Controller

K3s includes a built-in Helm controller that allows users to deploy and manage applications through Helm charts directly within the cluster. Helm is a widely used Kubernetes package manager that simplifies the deployment of complex applications by packaging them as charts, which are collections of Kubernetes resources. With k3s’s Helm integration, users can quickly install, upgrade, and manage applications, making it easier to work with large and complex applications on k3s.

Service Discovery and DNS

K3s integrates CoreDNS to provide service discovery and DNS functionality within the cluster. CoreDNS enables pods and services to find each other by name rather than by IP address, which is essential in dynamic environments where IPs can change frequently. With CoreDNS, applications can communicate with each other reliably within the k3s cluster, which is critical for microservices-based applications that rely on inter-service communication.
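Service names resolve through CoreDNS using the standard Kubernetes naming scheme `<service>.<namespace>.svc.<cluster domain>`. The helper below is a hypothetical illustration that assumes the default cluster domain, cluster.local, which can be changed with the `--cluster-domain` server option.

```python
def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name CoreDNS serves for a Service (hypothetical helper)."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


print(service_fqdn("orders", "shop"))  # orders.shop.svc.cluster.local
```

Inside the same namespace the short name (`orders`) is usually enough, because each pod's DNS search path appends the namespace and cluster domain.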

Support for Storage Solutions

K3s offers built-in support for storage through the Local Path Provisioner by default, allowing it to provision storage on the node’s local disk. This storage capability is particularly useful in edge and IoT deployments, where external storage solutions might not be feasible. Additionally, k3s supports other storage options, such as NFS and cloud storage providers, typically through CSI drivers, for users who need persistent and scalable storage for applications.
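Requesting local storage uses an ordinary PersistentVolumeClaim that names the `local-path` StorageClass registered by the Local Path Provisioner. The sketch builds the claim as a Python dictionary and prints it as JSON; the helper name, claim name, and size are hypothetical. Because each volume lives on one node's disk, it only supports ReadWriteOnce access, and pods that use it are tied to that node.

```python
import json


def local_path_pvc(name: str, size: str = "2Gi") -> dict:
    """Build a PersistentVolumeClaim backed by k3s's local-path StorageClass."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],  # single-node local disk
            "storageClassName": "local-path",
            "resources": {"requests": {"storage": size}},
        },
    }


claim = local_path_pvc("sensor-buffer")
print(json.dumps(claim, indent=2))
```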

Customizable Add-Ons

K3s provides a range of customizable add-ons that can be enabled or disabled based on the needs of the environment. These include:

- Metrics Server: A lightweight component that collects CPU and memory usage across the cluster for kubectl top and autoscaling; long-term monitoring of application performance generally requires a separate tool.

- Ingress and Network Policy Support: Traefik handles ingress routing by default, and k3s’s embedded network policy controller enforces standard NetworkPolicy resources alongside Flannel, though users can replace these with other tools if their environment requires more advanced networking configurations.

- Custom CNI and Storage Drivers: K3s allows the use of alternative CNIs and storage drivers, giving users flexibility to adapt the cluster to specific infrastructure needs.

Edge and IoT Compatibility

K3s is specifically designed for edge computing and IoT deployments, offering features and efficiencies that make it well-suited for these applications. Its low resource requirements allow it to run on low-power devices, and the single-binary architecture simplifies deployment in remote or resource-limited areas. This capability makes k3s ideal for environments that need to run workloads close to the data source (e.g., sensors or local processing nodes) to reduce latency and improve efficiency.

API Server Access Control and Security

K3s offers API access control and integrates with standard Kubernetes security mechanisms, including Role-Based Access Control (RBAC) and Network Policies. These features allow administrators to secure the cluster by managing permissions and limiting traffic between pods, ensuring that only authorized users and applications can interact with sensitive resources. This security feature is essential for edge deployments where clusters may be more vulnerable to unauthorized access due to their remote locations.
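The two mechanisms can be illustrated with a pair of standard manifests built in Python: a default-deny NetworkPolicy that blocks all inbound pod traffic in a namespace until other policies allow it, and a read-only RBAC Role for pods. The namespace, object names, and helper functions are hypothetical. The Role only takes effect once a RoleBinding grants it to a user or service account, and the sketch assumes k3s's embedded network policy controller has not been disabled, since that controller is what enforces the policy.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """NetworkPolicy selecting every pod in the namespace and allowing no ingress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def pod_reader_role(namespace: str) -> dict:
    """RBAC Role allowing read-only access to pods in one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group, where pods live
            "resources": ["pods"],
            "verbs": ["get", "list", "watch"],
        }],
    }


for obj in (default_deny_ingress("edge-apps"), pod_reader_role("edge-apps")):
    print(json.dumps(obj, indent=2))
```

Starting from deny-by-default and opening specific paths keeps a remote cluster's exposure small even if one workload is compromised.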