GCP 101: Google Kubernetes Engine (GKE)

GKE is a managed container orchestration service by Google that's built on Kubernetes, the popular open-source container management system.

Introduction

In this article, we'll discuss what GKE is, its benefits over self-managed Kubernetes, and how it stacks up against competitors like AWS EKS and Microsoft AKS. And of course, we'll talk about its killer feature, which might just make you say, "I will use GKE to run my Kubernetes workload!" So, let's dive into the technical details, shall we?

So, what's GKE all about?

GKE is a managed container orchestration service by Google that's built on Kubernetes, the popular open-source container management system. GKE takes care of your Kubernetes clusters so you can focus on developing great applications. With GKE, you get a fully managed environment where you can easily deploy, manage, and scale containerized apps. Here's how it works:

- GKE runs the Kubernetes control plane (historically called the master), which manages your worker nodes (where your containers run) and monitors their health and connectivity.

- It uses etcd, a distributed key-value store, to store configuration data and manage the overall state of the cluster.

- GKE automatically upgrades your clusters to newer Kubernetes versions, on a schedule that depends on the release channel the cluster is enrolled in, keeping them patched and up to date.
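
To make the control-plane idea a bit more concrete, here is a minimal Python sketch of the reconcile pattern that Kubernetes controllers follow: compare the desired state (which the cluster keeps in etcd) with what the nodes report, then act on the difference. The names `reconcile`, `desired`, and `observed` are hypothetical and exist only for illustration; this is a toy model, not code from GKE or Kubernetes.

```python
def reconcile(desired: dict, observed: dict) -> list:
    """Return the actions needed to move observed replica counts to the desired ones."""
    actions = []
    for name in sorted(desired.keys() | observed.keys()):
        want = desired.get(name, 0)
        have = observed.get(name, 0)
        if have < want:
            actions.append(f"start {want - have} pod(s) for {name}")
        elif have > want:
            actions.append(f"stop {have - want} pod(s) for {name}")
    return actions


# Desired state, as it might be recorded in the cluster's key-value store.
desired = {"web": 3, "worker": 2}
# State reported by the nodes: one web pod has died and an old job lingers.
observed = {"web": 2, "worker": 2, "old-job": 1}

for action in reconcile(desired, observed):
    print(action)
```

A real control plane runs this kind of comparison continuously and for many resource types; the sketch only shows a single pass over replica counts.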

GKE: Fewer headaches, more awesomeness

Let's take a look at some of the benefits that GKE offers, which make it stand out over self-managed Kubernetes:

- Simplified management: GKE manages the Kubernetes control plane, including the API server, etcd datastore, and core control plane components like the kube-controller-manager and kube-scheduler. This means you can focus on developing applications without worrying about infrastructure headaches.

- Autoscaling: GKE offers both horizontal and vertical autoscaling. Horizontal Pod Autoscaler (HPA) scales the number of pods based on metrics like CPU utilization or custom metrics. Vertical Pod Autoscaler (VPA) adjusts the CPU and memory resources allocated to your containers. Cluster Autoscaler, on the other hand, scales your node pools based on the demands of your workloads.

- Rolling updates and rollbacks: GKE supports rolling updates through Kubernetes Deployments, which replace pods gradually so that an update can complete without downtime, provided the Deployment has enough replicas and working readiness probes. If something goes wrong, you can roll back to a previous revision of the Deployment using the same mechanism.
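
The Horizontal Pod Autoscaler mentioned in the list above is easier to reason about with its scaling rule written out. The Kubernetes documentation describes it as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with scaling skipped when the ratio is close to 1 (a tolerance of 0.1 by default). The sketch below is a simplified Python rendering of that rule; the function name `desired_replicas` is an assumption for illustration, and real HPA behavior also involves min/max replica bounds and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA scaling rule (simplified).

    desired = ceil(current_replicas * current_metric / target_metric)
    No change is made when the ratio is within `tolerance` of 1.0.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# Average CPU utilization of 90% against a 60% target: scale 4 pods up to 6.
print(desired_replicas(4, current_metric=90, target_metric=60))
# 63% against a 60% target is within tolerance: stay at 4 pods.
print(desired_replicas(4, current_metric=63, target_metric=60))
# 20% against a 60% target: scale 4 pods down to 2.
print(desired_replicas(4, current_metric=20, target_metric=60))
```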

The Battle of the Titans: GKE vs. AWS EKS vs. Microsoft AKS

GKE is not alone in the jungle. There are two other titans in the field of managed Kubernetes services: AWS EKS and Microsoft AKS. Here's a comparison table for GKE, AWS EKS, and Microsoft AKS:

| Feature | GKE | AWS EKS | Microsoft AKS |
|---|---|---|---|
| Ease of use | Known for user-friendly interface | Improved but less intuitive than GKE | Similar to EKS, less intuitive than GKE |
| Integration | Seamless with GCP services | Seamless with AWS services | Seamless with Azure services |
| Pricing | Pay-as-you-go, free tier available | Pay-as-you-go, no free tier | Pay-as-you-go, free tier available |
| Cluster management | Fully managed control plane | Managed control plane | Fully managed control plane |
| Autoscaling | HPA, VPA, Cluster Autoscaler | HPA, Cluster Autoscaler | HPA, Cluster Autoscaler |
| Networking | VPC-native, Alias IPs, network policies | VPC, network policies | Azure Virtual Network, network policies |
| Security | IAM, RBAC, Workload Identity, Shielded VMs | IAM, RBAC, PrivateLink, Fargate isolation | Azure AD, RBAC, Private Link, Azure Policies |
| Load balancing | Internal, External, Network, and HTTP(S) | Classic, Network, Application | Internal, External, Network, and HTTP(S) |
| Monitoring | Cloud Monitoring (formerly Stackdriver), Prometheus, Grafana | CloudWatch, Prometheus, Grafana | Azure Monitor, Prometheus, Grafana |
| Logging | Cloud Logging (formerly Stackdriver Logging) | CloudWatch Logs | Azure Monitor logs |
| CI/CD integration | Cloud Build, Spinnaker, Tekton | CodeStar, CodePipeline, CodeBuild | Azure DevOps, GitHub Actions |

GKE's Killer Feature: Autopilot Mode

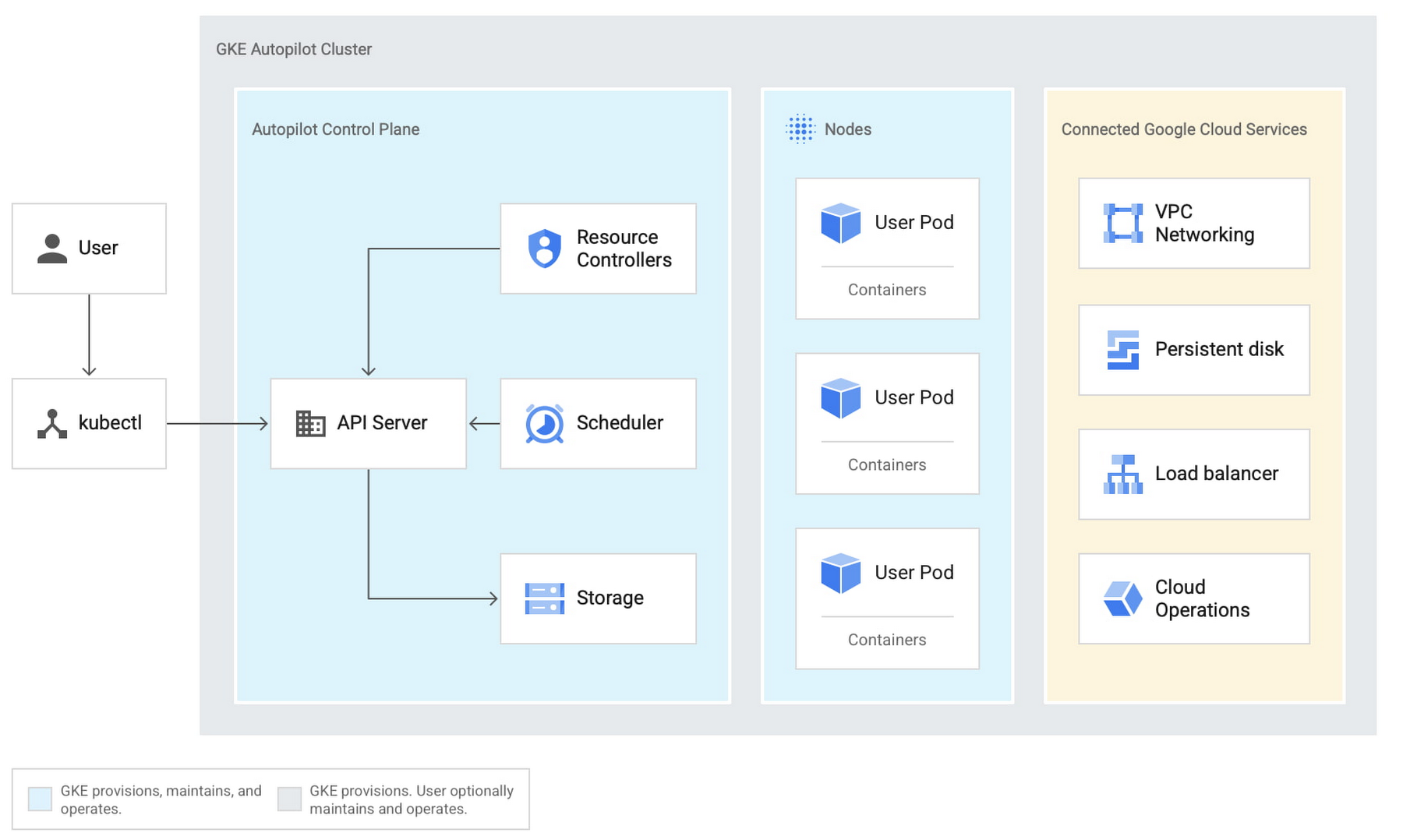

GKE's Autopilot mode is the feature that sets it apart from the competition. In a nutshell, Autopilot is a fully managed version of GKE that automatically optimizes your cluster's resources, security, and cost. Let's dive into the technical details:

- Automatic node management: When you create a new cluster in Autopilot mode, GKE provisions and manages the nodes for you. Nodes are automatically upgraded, repaired, and resized based on your workloads.

- Resource optimization: GKE Autopilot monitors and adjusts the resources of your cluster to ensure you only pay for what you use. This eliminates over-provisioning and under-utilization of resources.

- Enhanced security: Autopilot clusters are built on GKE's hardened base OS images, which include security patches and updates. Additionally, Autopilot enforces best practices like pod-level network policies, Workload Identity, and Shielded VMs for nodes.

- Maintenance windows and exclusions: You can define maintenance windows to control when your clusters are updated. Additionally, you can set maintenance exclusions to prevent updates during critical periods.
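
To illustrate how maintenance windows and exclusions interact, here is a small Python sketch of the decision they encode: an update may proceed only inside a window and outside every exclusion. This is a simplified model with one daily window; the function name `upgrade_allowed`, the window times, and the freeze dates are all hypothetical, and GKE's actual scheduling has more rules than shown here.

```python
from datetime import datetime, time


def upgrade_allowed(now: datetime, window_start: time, window_end: time,
                    exclusions: list) -> bool:
    """True if `now` is inside the daily window and outside every exclusion.

    `exclusions` is a list of (start, end) datetime pairs; end is exclusive.
    """
    for start, end in exclusions:
        if start <= now < end:
            return False
    t = now.time()
    if window_start <= window_end:
        return window_start <= t < window_end
    # The window wraps past midnight, for example 23:00 to 03:00.
    return t >= window_start or t < window_end


# Hypothetical policy: nightly window from 02:00 to 06:00,
# with updates frozen over a busy sales weekend.
window = (time(2, 0), time(6, 0))
freeze = [(datetime(2024, 11, 29), datetime(2024, 12, 2))]

print(upgrade_allowed(datetime(2024, 11, 20, 3, 0), *window, freeze))   # inside the window
print(upgrade_allowed(datetime(2024, 11, 20, 12, 0), *window, freeze))  # outside the window
print(upgrade_allowed(datetime(2024, 11, 30, 3, 0), *window, freeze))   # inside an exclusion
```

Checking exclusions first mirrors the intent described above: an exclusion takes priority over any window that happens to overlap it.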

Leveraging Google's Expertise in GKE

One of the key reasons to choose GKE as your managed Kubernetes service is Google's expertise in Kubernetes. After all, Kubernetes was created by Google engineers, who built it based on their experience with the internal container management system called Borg. Google has been running containerized workloads at scale for years, and they've learned a thing or two about how to do it effectively.

By using GKE, you're not just getting access to a managed Kubernetes service; you're also tapping into Google's knowledge and best practices when it comes to container orchestration. This expertise is evident in many aspects of GKE, such as:

- Continuous improvement: Google is one of the top contributors to the Kubernetes project, and they're continually working to improve the platform. By using GKE, you get the benefit of these improvements and can rest assured that you're working with a cutting-edge Kubernetes implementation.

- Optimized performance: GKE is designed to take full advantage of Google's global infrastructure, ensuring that your containerized applications run efficiently and reliably. With features like regional clusters, you can run your workloads close to your users, reducing latency and improving the overall user experience.

- Best practices built-in: GKE incorporates many of Google's best practices for running containerized workloads, such as using a declarative approach to manage resources, ensuring fault tolerance with multi-zonal clusters, and implementing strong security measures like workload identity and network policies.

- Strong ecosystem support: Google actively collaborates with the Kubernetes community and partners with a wide range of technology providers to ensure that GKE integrates seamlessly with popular tools and services. This means you can easily use GKE alongside your favorite container tools and platforms, such as Helm, Istio, and Knative.

So, is it time to run your infrastructure workload on Google Kubernetes Engine?

About 8grams

We are a small DevOps Consulting Firm that has a mission to empower businesses with modern DevOps practices and technologies, enabling them to achieve digital transformation, improve efficiency, and drive growth.

Ready to transform your IT Operations and Software Development processes? Let's join forces and create innovative solutions that drive your business forward.
