Docker: Revolutionizing Software Development and Distribution

Introduction

In the ever-evolving realm of software development and distribution, Docker has emerged as a pivotal change agent. As an open-source containerization platform, Docker has broken new ground by creating an environment where applications can be developed and shipped in a standardized, efficient manner. Its key advantages (portability, scalability, and efficient resource utilization) serve as compelling differentiators in today's fast-paced technology environment.

What is Docker?

At its core, Docker is an open-source platform designed to facilitate the process of containerization. It enables developers to package an application along with its libraries, dependencies, and other requirements within a standalone unit known as a container.

These containers are lightweight and isolated. They run directly on the host machine's kernel and rely on two Linux kernel features for isolation and resource control: namespaces, which give each container its own view of processes, networking, and the filesystem, and control groups (cgroups), which limit the resources a container can consume. This arrangement permits the running of multiple containers on a single host, each operating independently of the others.
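On Linux, the namespaces a process belongs to are visible as symlinks under /proc/<pid>/ns. The following is a minimal Python sketch (the function name `list_namespaces` is only illustrative) that lists them for the current process. It assumes a Linux host with procfs mounted and returns an empty dictionary elsewhere.

```python
import os

def list_namespaces(pid="self"):
    """Return {namespace_name: identifier} for a process.

    Reads the symlinks under /proc/<pid>/ns, which exist only on Linux.
    Returns an empty dict when that directory is unavailable.
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        entries = os.listdir(ns_dir)
    except OSError:
        return namespaces
    for name in sorted(entries):
        try:
            # Each link target looks like "pid:[<inode>]"; the inode
            # identifies the namespace instance.
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue
    return namespaces

if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:20} {ident}")
```

Running this once on the host and once inside a container will typically show different identifiers for namespaces such as pid, net, and mnt, which is the isolation described above.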

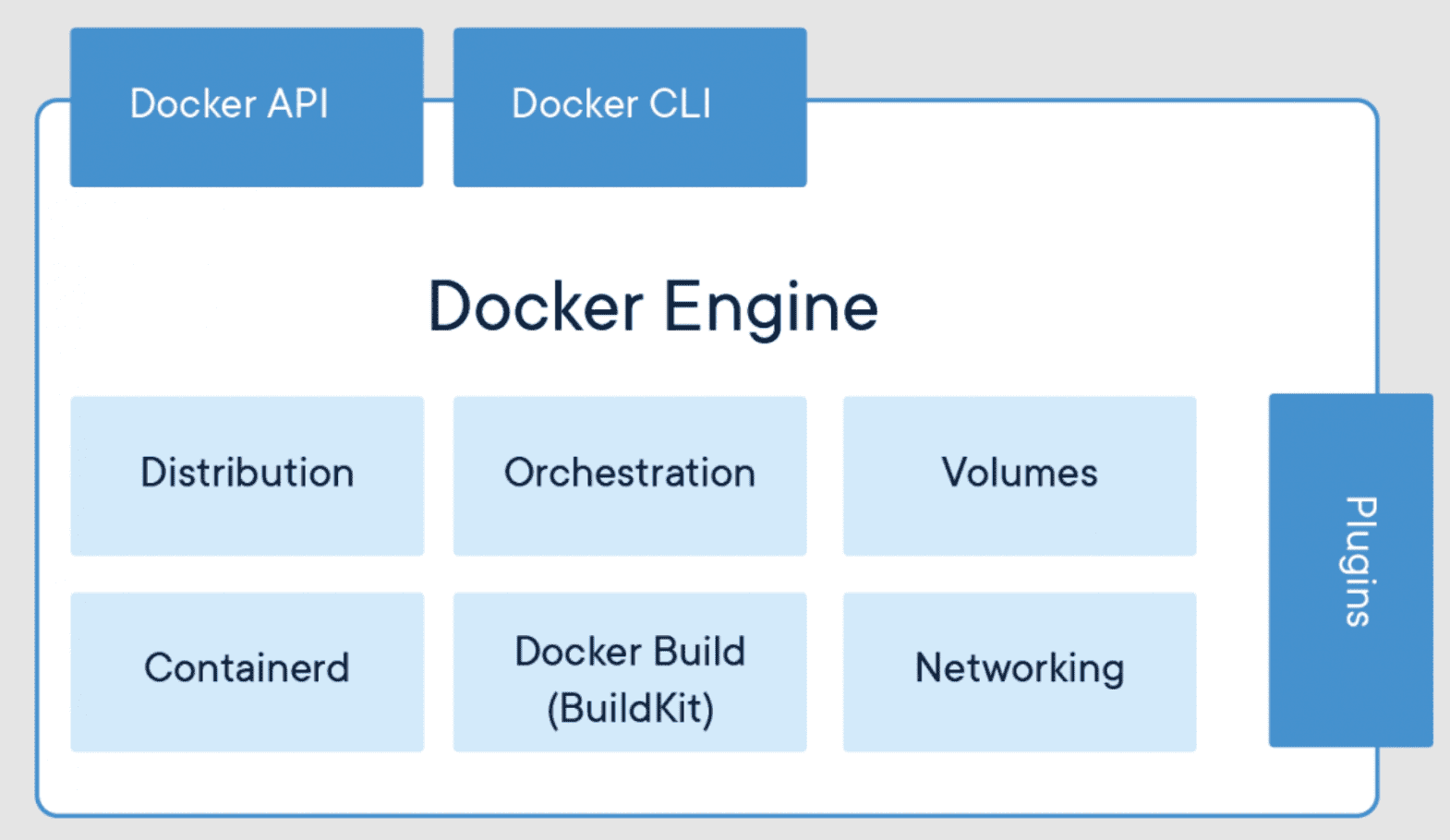

Docker's architecture consists of several components: the Docker Engine, a runtime that runs and manages containers; Docker images, the read-only templates from which containers are created; and Docker containers themselves, the runnable instances of Docker images.

The Open Container Initiative (OCI)

The OCI is a project under the auspices of the Linux Foundation. Its mission is to establish common standards for container technology and promote a shared set of principles and practices that ensure interoperability across different containerization platforms.

By providing specifications for the container runtime and image format, the OCI has played a critical role in ensuring compatibility among varying technologies. Docker, among other major container runtime engines, has adopted the OCI standards, which significantly contributes to its flexibility and portability.

Docker's History and its Relation to Virtualization

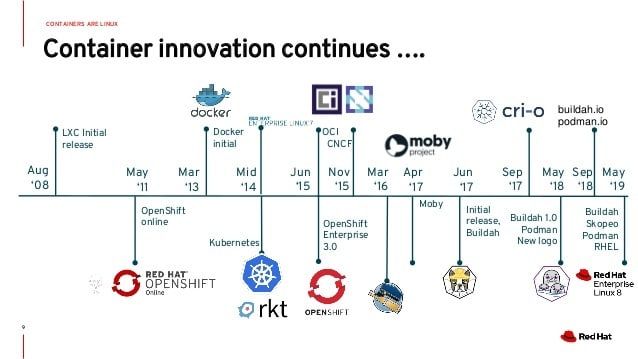

Conceived by Solomon Hykes, Docker was introduced to the world in 2013 at PyCon. Since its inception, Docker has seen widespread adoption across industries due to its innovative approach to software deployment and its striking contrast with traditional virtualization methods.

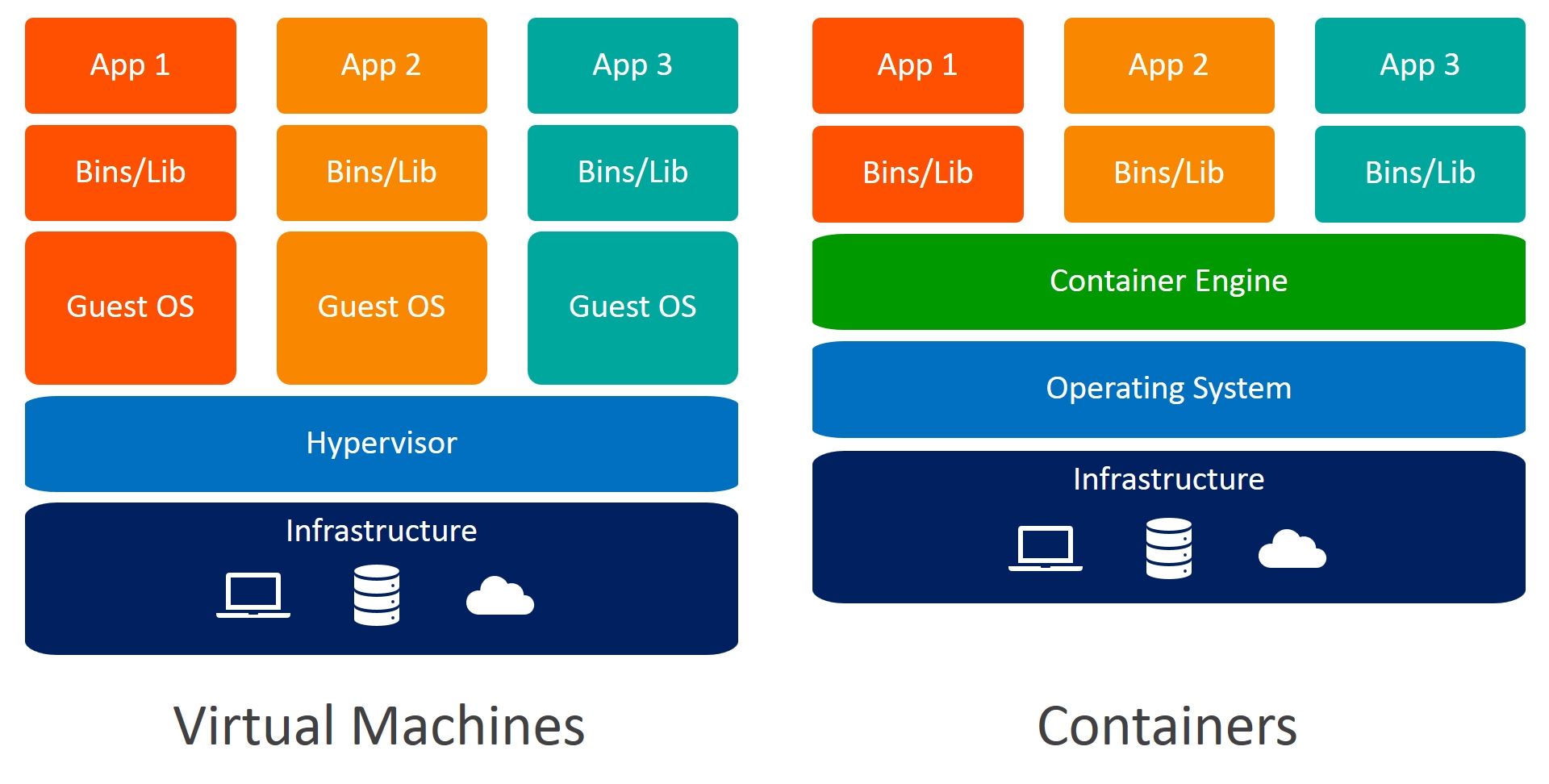

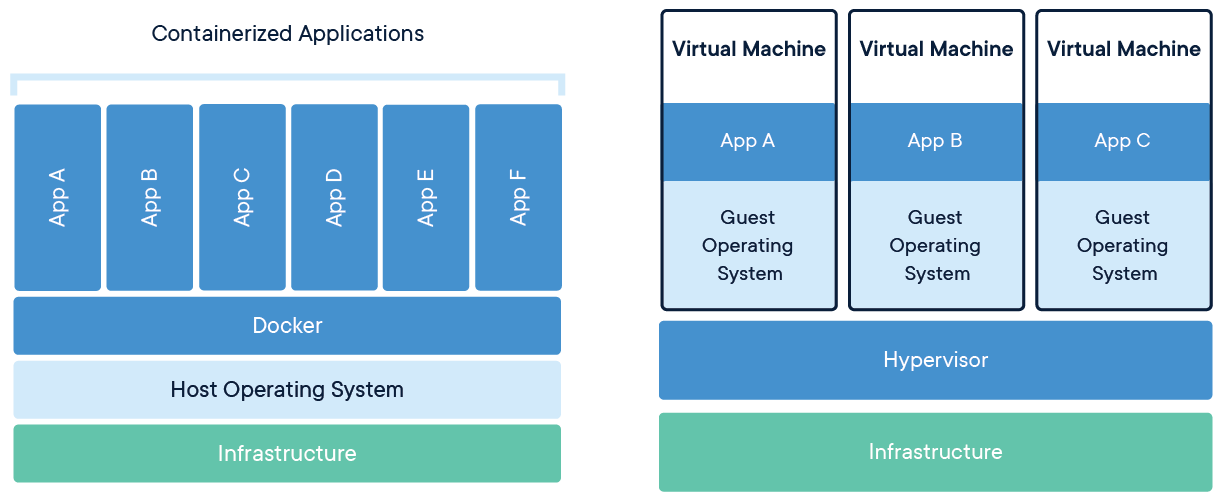

Unlike virtual machines (VMs), each of which requires a full guest operating system in addition to the application and its dependencies, Docker containers share the host OS kernel and include only the application and its dependencies. This results in a substantially lighter, more efficient form of application deployment and execution. The comparative advantages of Docker's approach include reduced overhead, faster startup times, and lower resource consumption.

Benefits and Distinctions: Docker vs. VMs

Docker offers a number of advantages over traditional VMs. Because containers share the host OS kernel, they need neither a hypervisor nor a separate guest OS per workload, which reduces overhead. This allows for a much higher density of applications on a single host compared to VMs, which can lead to significant cost savings.

In terms of resource utilization, Docker’s shared-kernel model is far more efficient than the full virtualization approach of VMs. Each Docker container runs as an isolated process on the host OS, consuming less memory and starting much faster than a VM. Docker also has the ability to limit the amount of resources each container can use, preventing a single container from monopolizing the host’s resources.
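As a rough illustration of per-container resource limits, the sketch below builds a `docker run` command line using the `--memory` and `--cpus` flags. The helper name `docker_run_command`, the container name `web`, and the chosen limits are assumptions made for the example. The code only assembles and prints the command, so it can be tried without a Docker installation.

```python
import shlex

def docker_run_command(image, memory=None, cpus=None, name=None):
    """Build the argv for `docker run` with optional resource limits.

    memory: value for --memory, e.g. "256m"
    cpus:   value for --cpus, e.g. 0.5 (half of one CPU)
    """
    argv = ["docker", "run", "--detach"]
    if name is not None:
        argv += ["--name", name]
    if memory is not None:
        argv += ["--memory", str(memory)]
    if cpus is not None:
        argv += ["--cpus", str(cpus)]
    argv.append(image)
    return argv

cmd = docker_run_command("nginx:latest", memory="256m", cpus=0.5, name="web")
print(shlex.join(cmd))
# prints: docker run --detach --name web --memory 256m --cpus 0.5 nginx:latest
```

Passing the returned list to `subprocess.run` would start the container on a machine where Docker is installed.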

In addition, Docker provides rapid provisioning (containers can be started in seconds), easy scalability (more containers can be added as required), and simplified management (thanks to Docker's declarative approach to container configuration), all of which contribute to its growing popularity.

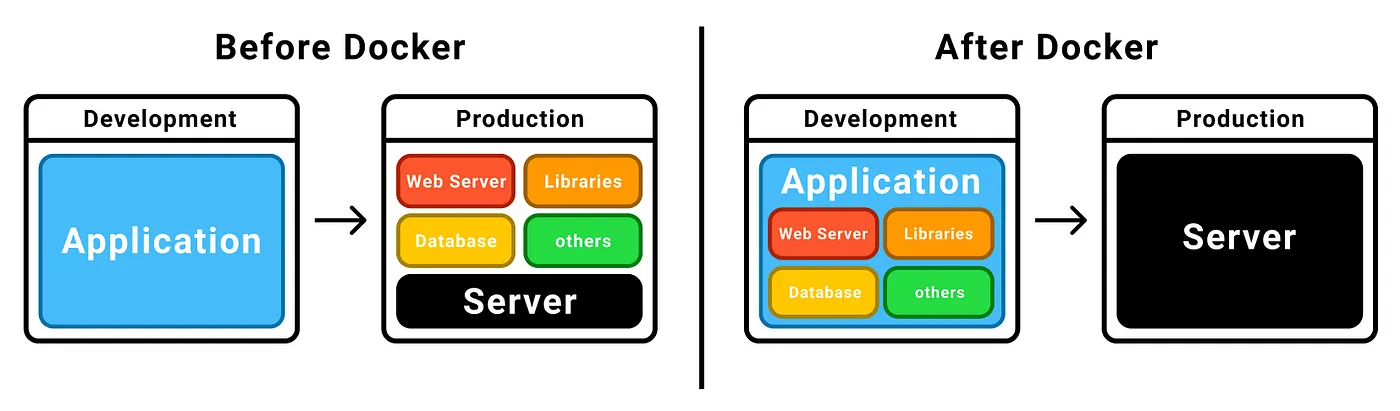

Docker's Impact on Software Development

Docker has radically transformed the software development lifecycle (SDLC) by facilitating a new development paradigm termed containerized development. With Docker, developers can work in a standardized environment that mimics the production environment, eliminating the notorious "it works on my machine" problem. It also supports microservices architecture by allowing each service to run in its own container, fostering decoupled, easier-to-manage applications.

Moreover, Docker streamlines the collaboration between different teams in an organization. Developers, testers, and operations teams can work on the same application, in the same environment, and under the same set of conditions, thereby fostering standardization, consistency, and reproducibility. This helps reduce friction and increase efficiency across the entire SDLC.

Docker as the Standard Distribution Format

Docker has positioned itself as the de facto standard for distributing software. Its containerization approach simplifies the deployment process, as Docker images serve as portable packages encompassing everything needed to run an application: the application code itself, along with its dependencies, libraries, and environment configurations.

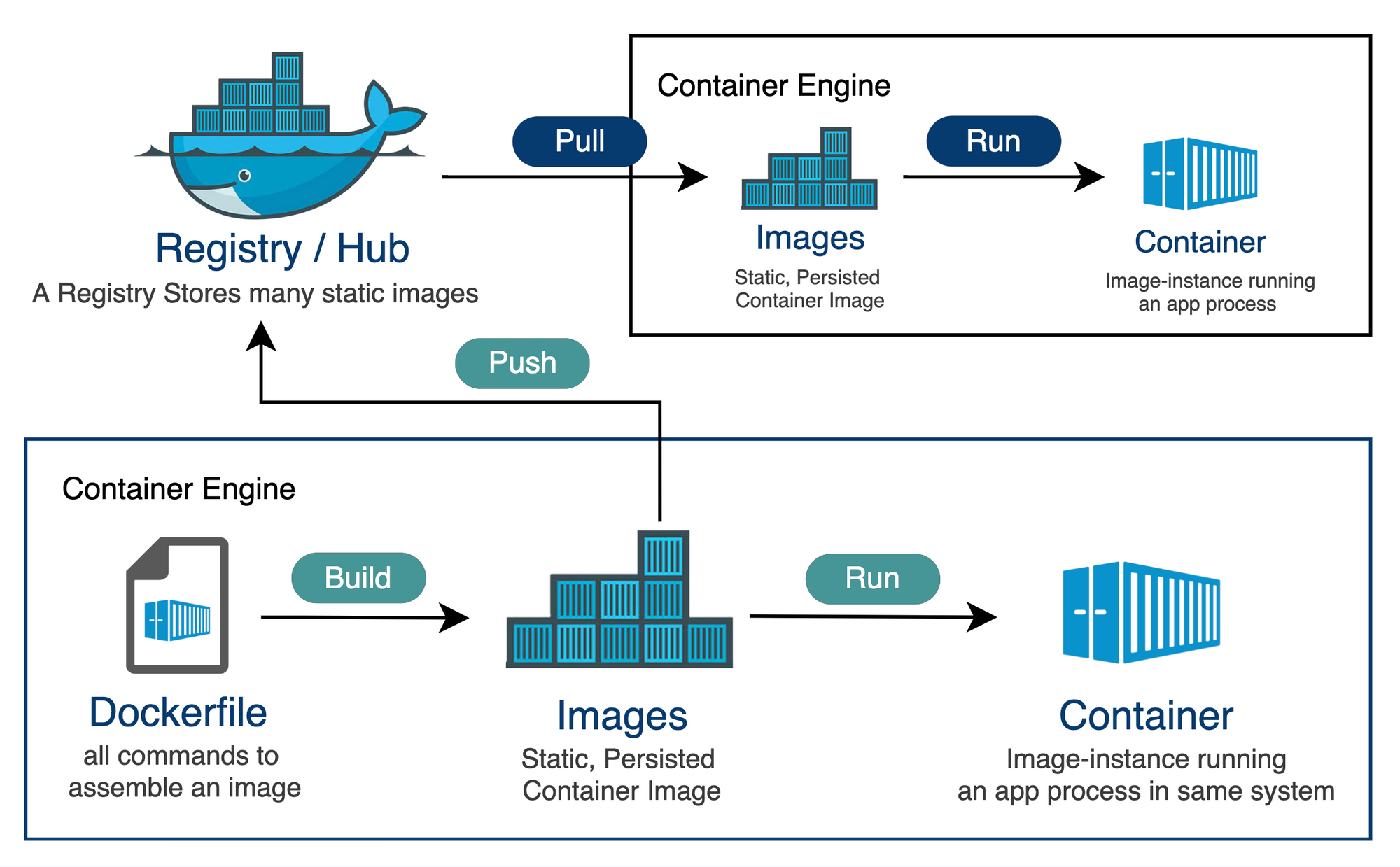

In addition, Docker Hub and Docker Registry play crucial roles in this process, acting as repositories for storing and sharing Docker images. These platforms allow developers to pull existing images and push new ones, fostering a collaborative ecosystem where pre-built Docker images for various applications and services can be shared and reused.
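Pulling and pushing both rely on image references such as `nginx:1.25` or `registry.example.com/team/app:1.0`. The following simplified sketch shows how such a reference can be split into registry, repository, and tag, using the commonly documented defaults (Docker Hub as the registry, the `library` namespace for official images, and `latest` as the tag). The function name is illustrative, the example hosts are hypothetical, and digest references (`name@sha256:...`) are deliberately not handled.

```python
def parse_image_reference(ref):
    """Split an image reference into (registry, repository, tag).

    Simplified: digests (name@sha256:...) are not handled.
    """
    # A tag is whatever follows a ':' that appears after the last '/'.
    last_slash = ref.rfind("/")
    last_colon = ref.rfind(":")
    if last_colon > last_slash:
        name, tag = ref[:last_colon], ref[last_colon + 1:]
    else:
        name, tag = ref, "latest"

    first, sep, rest = name.partition("/")
    # The first path component is treated as a registry host only if it
    # looks like one: it contains a dot or a port, or is "localhost".
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name
        if "/" not in repository:
            # Official images on Docker Hub live under "library/".
            repository = "library/" + repository
    return registry, repository, tag

for ref in ("nginx", "myuser/app:v2", "localhost:5000/team/app"):
    print(ref, "->", parse_image_reference(ref))
```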

Alternative Container Technologies: Podman and Beyond

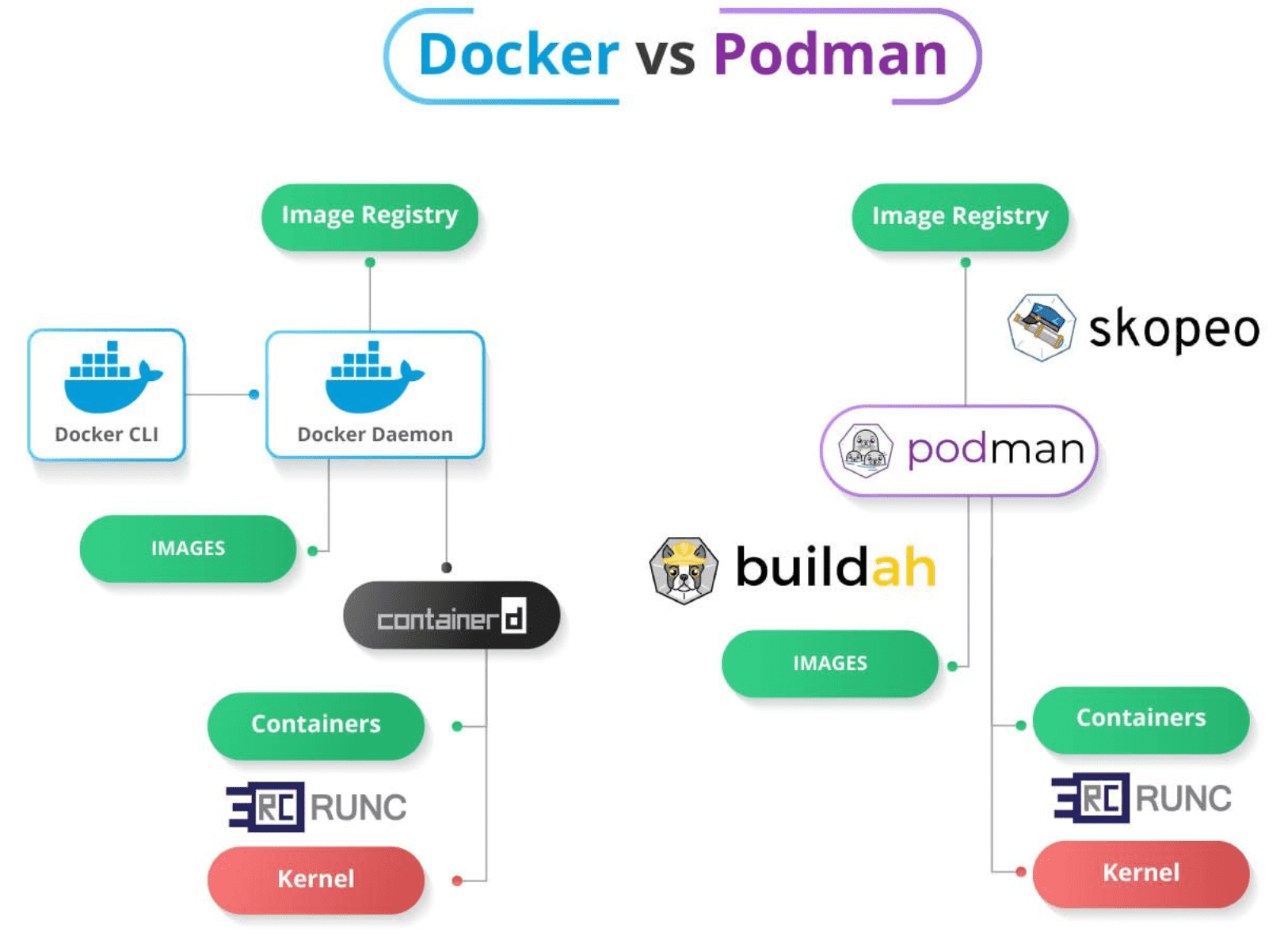

While Docker is undoubtedly a dominant player in the containerization space, other tools such as Podman, CRI-O, and rkt (deprecated) have emerged offering alternative solutions.

Podman, for instance, is notable for its daemonless architecture and its compatibility with Docker's CLI and Docker Compose. CRI-O, by contrast, is a lightweight alternative tailored specifically for Kubernetes.

Each of these alternatives offers unique features and benefits, and the choice between Docker and these alternatives depends on the specific needs and context of a given use case. However, despite the presence of these tools, Docker's extensive functionality, widespread adoption, and the robustness of its ecosystem continue to set it apart.

Docker Architecture

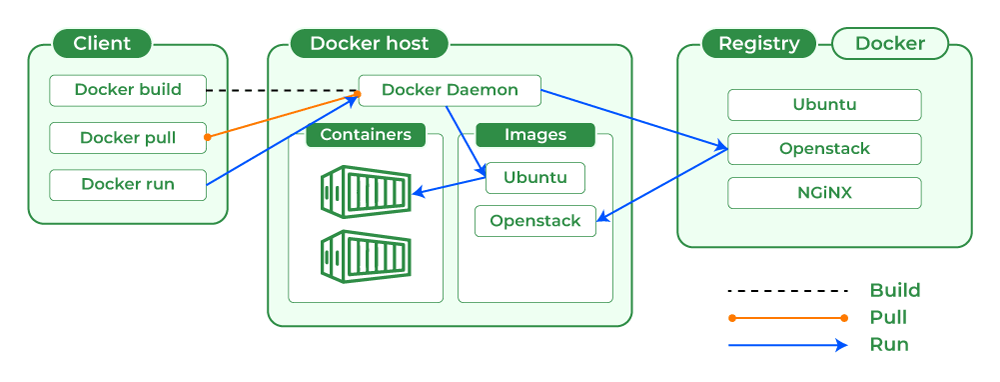

Docker's architecture comprises several key components, each of which plays a role in the containerization process. These components include the Docker Engine, Docker Images, Docker Containers, and Docker Registries.

The Docker Engine is the runtime that builds and runs Docker containers. It is composed of the Docker Daemon, which is the background service responsible for managing and executing containers, and the Docker Client, which is the command-line tool that allows users to interact with Docker.

Docker Images are read-only templates used to create containers. They are built from instructions written in a Dockerfile and can be shared and reused across different environments. Docker Registries, on the other hand, are repositories for storing and distributing Docker Images. Docker Hub is the default public registry, but users can also set up private registries for storing their images.

Docker Daemon

The Docker Daemon, also known as dockerd, is the persistent background process that manages Docker containers. It is responsible for all container-related operations and interacts with the host operating system's kernel to create, start, stop, and monitor containers.

It also manages Docker objects such as images, networks, and volumes. The Docker Daemon listens for Docker API requests and handles them accordingly. It can communicate with other Docker Daemons to manage Docker services across several hosts.

Docker Client

The Docker Client is the primary user interface to Docker. Through its command-line interface (CLI), users issue commands to build, run, and manage Docker containers. When a command is executed, the Docker Client sends it to the Docker Daemon, which carries it out.
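The client and daemon communicate over the Docker Engine API, which on Linux is typically exposed on the Unix socket /var/run/docker.sock. The sketch below is not how the official client is implemented. It is a minimal standard-library illustration that sends `GET /version` over that socket. The class and function names are assumptions made for the example, and the code assumes a platform with Unix domain sockets.

```python
import http.client
import json
import socket

class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        # The host name is only used for the Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock

def docker_version(socket_path="/var/run/docker.sock"):
    """Ask the daemon for its version info; return None if it is unreachable."""
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")
        response = conn.getresponse()
        if response.status != 200:
            return None
        return json.loads(response.read())
    except (OSError, http.client.HTTPException, ValueError):
        return None
    finally:
        conn.close()

if __name__ == "__main__":
    print(docker_version())
```

When a daemon is running and the socket is accessible, this prints a dictionary of version details. Otherwise it prints `None`.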

Docker also provides other ways to interact with the daemon, such as Docker Compose, a tool for defining and managing multi-container Docker applications.

Docker Registry

A Docker Registry is a repository for storing and distributing Docker Images. It plays a crucial role in the Docker ecosystem by facilitating the sharing of applications packaged as Docker images. Docker Registries can be public or private, based on the accessibility of the stored Docker images.

Docker Hub, maintained by Docker Inc., is the default public registry where users can store images and access them from any Docker host. Docker also allows users to run their own private registry. Docker Images stored in a registry are layered and versioned, ensuring efficient image storage and distribution.
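To make the idea of layering concrete, here is a purely conceptual model in Python. It is not how Docker's storage drivers work internally. Each layer is a dictionary of file paths, upper layers shadow lower ones, and every container gets its own empty writable layer on top of the shared read-only image layers. All names and file contents are made up for illustration.

```python
from collections import ChainMap

# Each layer maps file paths to contents; later layers shadow earlier ones.
base_layer = {"/etc/motd": "base image", "/bin/sh": "<shell binary>"}
app_layer = {"/app/main.py": "print('hi')", "/etc/motd": "app image"}

def image_view(layers):
    """Merged view of an image; `layers` is ordered from base to top."""
    return ChainMap(*reversed(layers))

def start_container(layers):
    """A container adds one empty writable layer above the image layers."""
    return image_view(layers).new_child({})

image_layers = [base_layer, app_layer]
container_a = start_container(image_layers)
container_b = start_container(image_layers)

# Writes land in container A's own top layer only.
container_a["/tmp/scratch"] = "only in A"

print(container_a["/etc/motd"])        # prints: app image
print("/tmp/scratch" in container_b)   # prints: False
print("/tmp/scratch" in app_layer)     # prints: False
```

Because both containers reference the same underlying layer dictionaries, the shared content exists only once, which mirrors why layered images are efficient to store and distribute.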

Docker Networking and Storage

Networking in Docker is managed by the Docker daemon, which creates and maintains the network stack for containers. Docker provides several networking options to connect containers to each other and to the outside world, including bridge networks for isolated networks on a single host, overlay networks for multi-host networking in a swarm, and host networks for sharing the host's network stack.

Storage in Docker is managed by Docker Volumes, a mechanism for persisting data generated by and used by Docker containers. Docker Volumes are completely managed by Docker and are stored in a part of the host filesystem which is managed by Docker (/var/lib/docker/volumes/ on Linux).
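The networking and storage options described in this section map onto a handful of CLI commands. The sketch below assembles them as argument lists without executing anything. The helper names, the network name `app-net`, the volume name `app-data`, and the choice of the `redis:7` image with its `/data` directory are assumptions made for the example.

```python
import shlex

def network_create_command(name, driver="bridge"):
    """argv for creating a user-defined network with the given driver."""
    return ["docker", "network", "create", "--driver", driver, name]

def volume_create_command(name):
    """argv for creating a named volume managed by Docker."""
    return ["docker", "volume", "create", name]

def run_command(image, network, volume, mount_path):
    """argv for running a container attached to a network and a volume."""
    return [
        "docker", "run", "--detach",
        "--network", network,
        "--mount", f"type=volume,source={volume},target={mount_path}",
        image,
    ]

commands = [
    network_create_command("app-net"),
    volume_create_command("app-data"),
    run_command("redis:7", "app-net", "app-data", "/data"),
]
for argv in commands:
    print(shlex.join(argv))
```

Data written under the mount path is stored in the named volume, so it outlives the container that wrote it.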

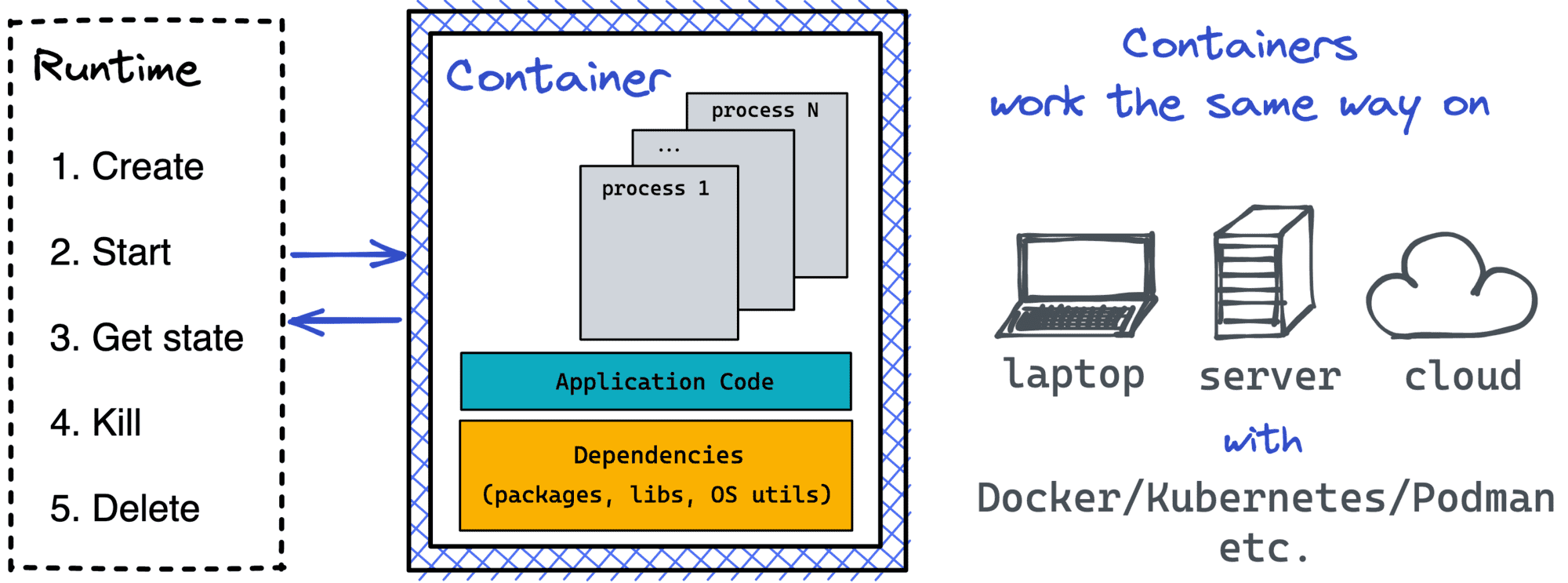

Container Runtime

The container runtime within Docker is responsible for executing containers by coordinating with the host operating system's kernel. It manages the lifecycle of a container, from its creation to its deletion, and handles the allocation of system resources like CPU and memory.

Docker initially relied on LXC and then on its own libcontainer library to run containers. In line with the efforts of the Open Container Initiative (OCI), it adopted runc, a lightweight, universal container runtime. It also created containerd, a higher-level container runtime with more features, as a standalone project.
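An OCI runtime such as runc is driven by a JSON configuration file that describes the process to start, the root filesystem, and the namespaces and resource limits to apply. The sketch below builds a deliberately incomplete configuration to show the general shape of that document. The field names follow the OCI runtime specification as best understood here, while the function name, hostname, and default values are assumptions. A real configuration contains more sections (mounts and capabilities, for example), so this output should not be expected to run as-is.

```python
import json

def minimal_oci_config(args, rootfs="rootfs", memory_limit_bytes=None):
    """Build a partial OCI runtime config as a plain dictionary."""
    config = {
        "ociVersion": "1.0.2",
        "process": {
            "args": list(args),   # command executed inside the container
            "cwd": "/",
            "env": ["PATH=/usr/local/bin:/usr/bin:/bin"],
        },
        "root": {"path": rootfs, "readonly": True},
        "hostname": "sketch",
        "linux": {
            # One entry per namespace the container should get.
            "namespaces": [
                {"type": kind}
                for kind in ("pid", "network", "ipc", "uts", "mount")
            ],
        },
    }
    if memory_limit_bytes is not None:
        # Resource limits are enforced through control groups.
        config["linux"]["resources"] = {"memory": {"limit": memory_limit_bytes}}
    return config

config = minimal_oci_config(["/bin/sh"], memory_limit_bytes=256 * 1024 * 1024)
print(json.dumps(config, indent=2))
```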

Containerization Process

The containerization process in Docker begins with a Dockerfile. A Dockerfile is a text document that contains all the instructions to build a Docker Image. It specifies the base image to start with, the software dependencies to install, the application code to include, and the commands to run at startup.
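To show what such a file can look like, the sketch below renders a small Dockerfile for a hypothetical Python application. The base image `python:3.12-slim`, the file names `requirements.txt` and `app.py`, and the helper `render_dockerfile` are assumptions chosen for illustration. The four parts named above correspond to the `FROM`, `RUN`, `COPY`, and `CMD` instructions.

```python
import json

def render_dockerfile(base_image, workdir, requirements_file, start_command):
    """Return the text of a simple Dockerfile for a Python application."""
    lines = [
        f"FROM {base_image}",                      # base image to start from
        f"WORKDIR {workdir}",                      # directory for later steps
        f"COPY {requirements_file} .",             # copy the dependency list
        f"RUN pip install --no-cache-dir -r {requirements_file}",
        "COPY . .",                                # copy the application code
        # Exec-form CMD is written as a JSON array.
        "CMD " + json.dumps(list(start_command)),
    ]
    return "\n".join(lines) + "\n"

print(render_dockerfile(
    base_image="python:3.12-slim",
    workdir="/app",
    requirements_file="requirements.txt",
    start_command=["python", "app.py"],
))
```

Copying the dependency list and installing it before copying the rest of the code is a common ordering, since it lets Docker reuse the cached dependency layer when only application code changes.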

Once a Dockerfile is defined, Docker builds an image using the docker build command. The image is tagged with a name and version and can then be run as a container using the docker run command. After the container has been tested and verified, the image can be pushed to a Docker Registry using the docker push command for storage and distribution.
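The build, run, and push steps can be sketched as a small script. The image name `registry.example.com/team/app` and the helper names are hypothetical. By default the script only prints the commands (a dry run), and passing `dry_run=False` assumes that Docker is installed and that the registry accepts the push.

```python
import shlex
import subprocess

def workflow_commands(image, tag, context="."):
    """Return the build, run, and push commands for one image version."""
    ref = f"{image}:{tag}"
    return [
        ["docker", "build", "--tag", ref, context],  # build and tag the image
        ["docker", "run", "--rm", ref],              # run it once for testing
        ["docker", "push", ref],                     # publish to the registry
    ]

def run_workflow(commands, dry_run=True):
    """Print each command and, unless dry_run is set, execute it."""
    for argv in commands:
        print("$", shlex.join(argv))
        if not dry_run:
            # check=True stops the workflow at the first failing step.
            subprocess.run(argv, check=True)

run_workflow(workflow_commands("registry.example.com/team/app", "1.0.0"))
```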

About 8grams

We are a small DevOps Consulting Firm that has a mission to empower businesses with modern DevOps practices and technologies, enabling them to achieve digital transformation, improve efficiency, and drive growth.