Cost Savings on the Cloud

Introduction

The cloud has revolutionized the way businesses deploy and scale their applications, but as usage grows, so do the costs. One of the key aspects that many companies overlook is the optimization of cloud resources and pricing models. While cloud services offer flexibility and scalability, they can quickly become expensive if not managed effectively. Fortunately, by implementing a few strategic practices and using cloud features more efficiently, organizations can significantly reduce their cloud expenses without compromising performance.

In this article, we’ll explore several strategies that can help you save money on the cloud. From choosing the right instance types to optimizing storage costs, we’ll cover the most effective ways to keep your cloud bill under control.

Understanding Cloud Pricing and Resource Usage

Cloud providers such as AWS, Microsoft Azure, and Google Cloud use complex pricing models based on resource consumption. Whether it's compute power, storage, or networking, the more resources you use, the more you pay. The key to saving money lies in understanding these pricing models and adjusting your usage to fit your actual needs.

Cloud pricing is typically divided into three primary categories:

- On-demand pricing – This is the pay-as-you-go model where you are billed only for the resources you consume, with no upfront commitment. While flexible, on-demand pricing can quickly become expensive if you over-provision resources or leave them running unnecessarily.

- Reserved instances – Reserved instances allow you to commit to a certain level of usage over a one- or three-year term in exchange for a discounted rate. These are ideal for workloads that are predictable and steady.

- Spot/Pre-emptible instances – These are short-term, low-cost compute resources that cloud providers offer when there is excess capacity. These instances can be interrupted at any time, so they are best suited for non-critical workloads.

The challenge is that, without careful management, you can easily end up over-allocating resources and incurring unnecessary costs. This is where proactive cost management and resource optimization come into play.
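To make the trade-off between the three models concrete, here is a minimal sketch that compares the monthly cost of one always-on instance. The hourly rate and both discount percentages are illustrative assumptions, not actual provider prices.

```python
HOURS_PER_MONTH = 730  # average number of hours in a month

def monthly_cost(hourly_rate: float, hours: float, discount: float = 0.0) -> float:
    """Cost of running one instance for `hours` at a discounted hourly rate."""
    return round(hourly_rate * (1.0 - discount) * hours, 2)

# Hypothetical numbers for illustration only.
ON_DEMAND_RATE = 0.10      # USD per hour
RESERVED_DISCOUNT = 0.40   # assumed discount for a one-year commitment
SPOT_DISCOUNT = 0.70       # assumed average spot discount

on_demand = monthly_cost(ON_DEMAND_RATE, HOURS_PER_MONTH)
reserved = monthly_cost(ON_DEMAND_RATE, HOURS_PER_MONTH, RESERVED_DISCOUNT)
spot = monthly_cost(ON_DEMAND_RATE, HOURS_PER_MONTH, SPOT_DISCOUNT)

print(f"on-demand: ${on_demand}, reserved: ${reserved}, spot: ${spot}")
```

Plugging in the current rates from your provider's pricing page gives a quick first estimate of which model fits a given workload.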

Use Spot and Pre-emptible Instances

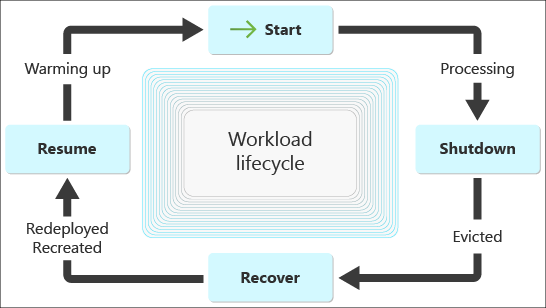

One of the most effective ways to save money on cloud computing is to take advantage of spot or pre-emptible instances. These instances offer significant savings compared to regular on-demand instances, sometimes by up to 90%. However, they come with the caveat that the cloud provider can terminate them at any time with little notice.

What Are Spot and Pre-emptible Instances?

Spot instances (AWS) and pre-emptible VMs (Google Cloud) are essentially unused capacity that cloud providers offer at a fraction of the cost. These resources are cheaper because they are less reliable: the provider can reclaim them when it needs the capacity back. For example, AWS can reclaim a spot instance when demand for capacity increases, typically giving you only a two-minute interruption notice, and Google Cloud gives pre-emptible VMs about 30 seconds.

When to Use Spot Instances

Spot instances are ideal for non-mission-critical applications and workloads that are fault-tolerant. These might include batch processing, data analysis, and certain testing environments. As long as you can handle interruptions and restart processes when an instance is terminated, the cost savings can be substantial.

For example, in a machine learning project, you may use spot instances for training models that are not time-sensitive. If the instance is terminated, the training process can resume on another instance with minimal disruption.
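The resume-after-termination pattern described above can be sketched as a loop that saves a checkpoint after every step. This is a toy illustration, not a real training job: the `train` function, the JSON checkpoint format, and the `interrupt_at` parameter (which simulates the provider reclaiming the instance) are all assumptions made for the example.

```python
import json
import os
import tempfile
from typing import Optional

def train(total_steps: int, checkpoint_path: str,
          interrupt_at: Optional[int] = None) -> int:
    """Run a toy training loop and return the last completed step."""
    step = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            step = json.load(f)["step"]  # resume where the last run stopped

    while step < total_steps:
        if interrupt_at is not None and step == interrupt_at:
            return step  # simulated termination; progress is already saved
        step += 1  # stand-in for one real training step
        with open(checkpoint_path, "w") as f:
            json.dump({"step": step}, f)
    return step

path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
first = train(10, path, interrupt_at=4)  # interrupted after 4 steps
second = train(10, path)                 # a new instance resumes from step 4
print(first, second)
```

In a real setup the checkpoint would need to live on durable storage outside the instance (for example, object storage), since the local disk of a terminated spot instance may not survive.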

When Not to Use Spot Instances

Spot instances are not suitable for workloads requiring high availability or zero downtime. If your application is time-sensitive (e.g., real-time transaction processing), the potential for interruptions may outweigh the cost savings.

Start with the Smallest Size and Scale as Needed

One of the most common mistakes when migrating to the cloud is over-provisioning resources at the start. Cloud providers offer a wide variety of instance types with varying levels of compute power, memory, and storage. It’s easy to assume that bigger is always better, but this can quickly lead to unnecessary expenses.

Initial Sizing for Cloud Resources

The best approach is to start with the smallest instance size that meets your requirements. For example, when setting up a database, instead of provisioning a large instance with 100 GB of storage from the start, begin with a smaller one with 10 GB. Monitor the performance and usage over time, and then scale up as necessary.

Cloud environments are flexible, so there is no need to overestimate your needs upfront. Starting small allows you to pay only for the resources you are actually using and adjust later as your application grows.

Monitoring and Adjusting Usage

Cloud providers offer tools to help you monitor resource usage and optimize performance. For instance, Amazon CloudWatch, Azure Monitor, and Google Cloud's operations suite (now Google Cloud Observability) provide insights into the utilization of instances and other resources. By continuously tracking usage, you can avoid over-provisioning and scale resources dynamically based on actual demand.

Eliminate Unused Environments (Dev/QA)

Development and testing environments are essential for any software lifecycle, but many organizations fail to decommission these environments when they are not needed, which results in wasted cloud resources and unnecessary costs.

The Hidden Cost of Idle Environments

Running multiple environments like development (dev) and quality assurance (QA) 24/7 can lead to substantial costs, especially if these environments are not used continuously. For example, a team may leave dev and QA environments running overnight or during weekends, accumulating charges for resources that are not actively being used.

Automating Environment Management with Infrastructure as Code (IaC)

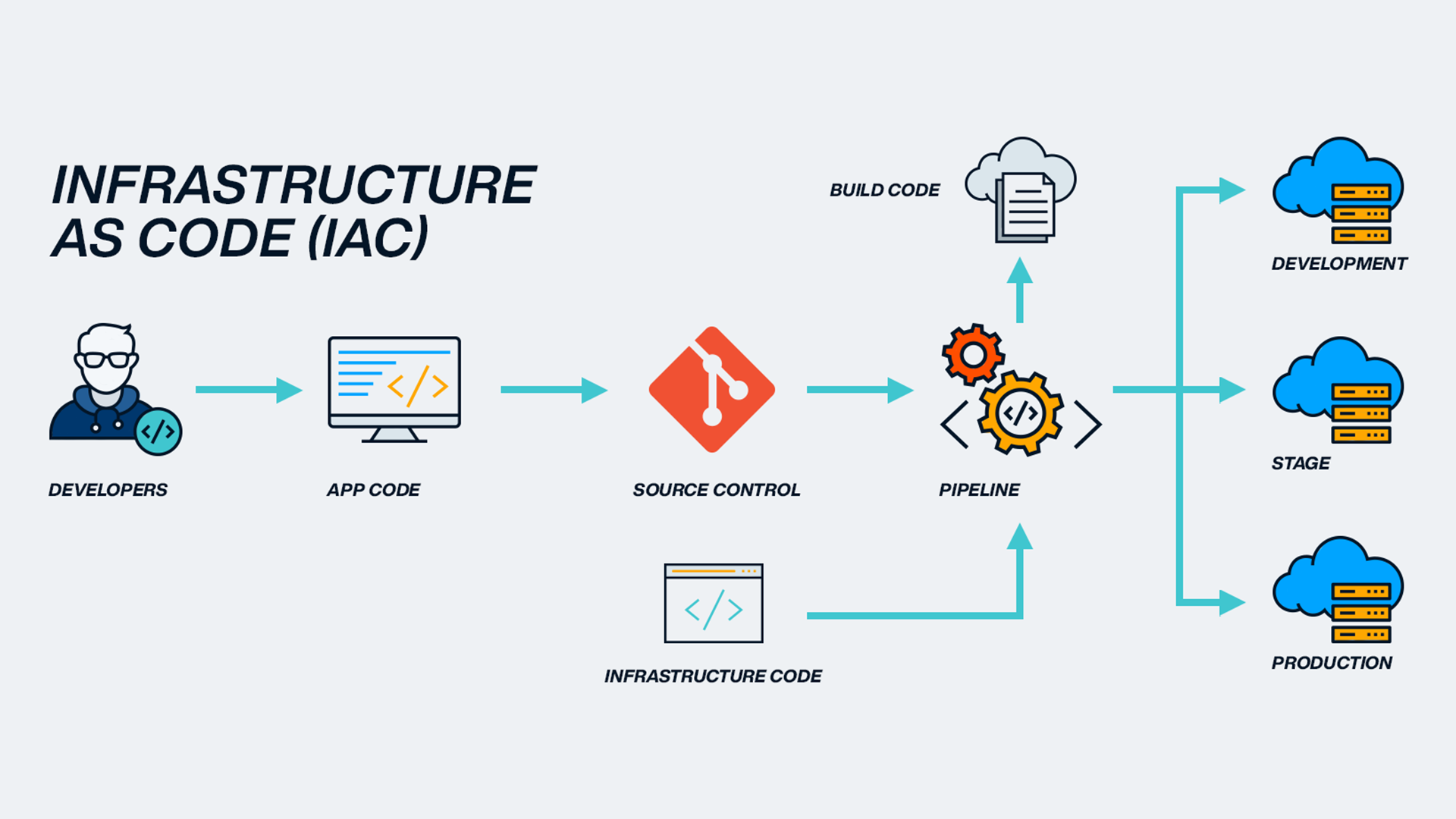

One of the most effective ways to manage these environments and reduce costs is through the use of Infrastructure as Code (IaC). IaC allows you to define, deploy, and manage infrastructure programmatically. By automating environment provisioning and de-provisioning, you can ensure that dev and QA environments are only running when needed.

Tools like Terraform, AWS CloudFormation, and Google Cloud Deployment Manager allow you to create scripts that automatically spin up environments when needed (e.g., during working hours) and tear them down when not in use (e.g., at night or weekends).

Example: Automating Dev/QA Environments

Let’s say your development team works Monday to Friday, 9 AM to 5 PM. With IaC, you can schedule the destruction of the dev and QA environments during off-hours and bring them back online the next morning. This strategy can save substantial amounts by ensuring that resources are only running when they are actually being used.
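As a rough sketch of the schedule in this example, the check below decides whether the environments should be up at a given moment and estimates the reduction in instance-hours. The function name and constants are hypothetical. A scheduler such as a cron job or CI pipeline would call a check like this before applying or destroying the IaC configuration.

```python
from datetime import datetime

WORK_START_HOUR = 9   # 9 AM
WORK_END_HOUR = 17    # 5 PM

def should_be_running(now: datetime) -> bool:
    """True during Monday-to-Friday working hours, False otherwise."""
    is_weekday = now.weekday() < 5  # Monday is 0, Sunday is 6
    return is_weekday and WORK_START_HOUR <= now.hour < WORK_END_HOUR

running_hours = 5 * (WORK_END_HOUR - WORK_START_HOUR)  # 40 hours per week
always_on_hours = 7 * 24                               # 168 hours per week
savings_fraction = 1 - running_hours / always_on_hours

print(f"{savings_fraction:.0%} fewer instance-hours per week")
```

Under these assumptions, the environments run 40 of 168 hours each week. The saving applies to compute billed by the hour. Resources that persist while the environment is down, such as stored snapshots, continue to cost money.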

By eliminating unused environments, you can significantly reduce cloud costs and focus your budget on production workloads that require continuous availability.

Standardize Architecture Patterns with IaC

Another key strategy to avoid unnecessary cloud costs is to standardize your cloud infrastructure using Infrastructure as Code (IaC). Standardizing the architecture ensures that resources are provisioned with consistent, optimized configurations and prevents the accidental creation of resource-heavy instances or misconfigurations that lead to cost spikes.

Consistency and Cost Control

By using IaC to standardize your cloud architecture, you ensure that all environments (dev, test, production) follow a predefined, cost-efficient blueprint. This approach helps eliminate the risk of over-provisioning or creating non-optimal configurations.

For example, instead of allowing developers to choose the instance size for each new application, you can enforce a policy using IaC that automatically provisions smaller, cost-effective instance types unless there is a valid reason for a larger instance.
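The policy described above can be sketched as a small validation function. The size names, the allow-list, and the `resolve_instance_size` helper are hypothetical. In practice a rule like this would usually live in the IaC tool itself, for example as an input validation or a policy check in the deployment pipeline.

```python
from typing import Optional

# Hypothetical size names and allow-list; real values depend on your provider.
DEFAULT_SIZE = "small"
ALLOWED_WITHOUT_REVIEW = {"small", "medium"}

def resolve_instance_size(requested: Optional[str] = None,
                          justification: str = "") -> str:
    """Return the size to provision, rejecting large sizes without a justification."""
    if requested is None:
        return DEFAULT_SIZE
    if requested in ALLOWED_WITHOUT_REVIEW or justification.strip():
        return requested
    raise ValueError(f"Size '{requested}' requires a justification")

print(resolve_instance_size())
print(resolve_instance_size("xlarge", "in-memory analytics job"))
```

Defaulting to the smallest size means the cheap option takes no effort, while a larger instance requires a deliberate, recorded decision.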

Avoiding Cost Spikes

Misconfigurations or the use of inefficient resources can lead to unexpected cost increases. For instance, a misconfigured instance type or an environment that is accidentally over-provisioned can result in a significant price spike. By standardizing the architecture with IaC, you reduce the likelihood of such mistakes, ensuring that resources are consistently aligned with actual needs and budget requirements.

Leverage Autoscaling for Cost Efficiency

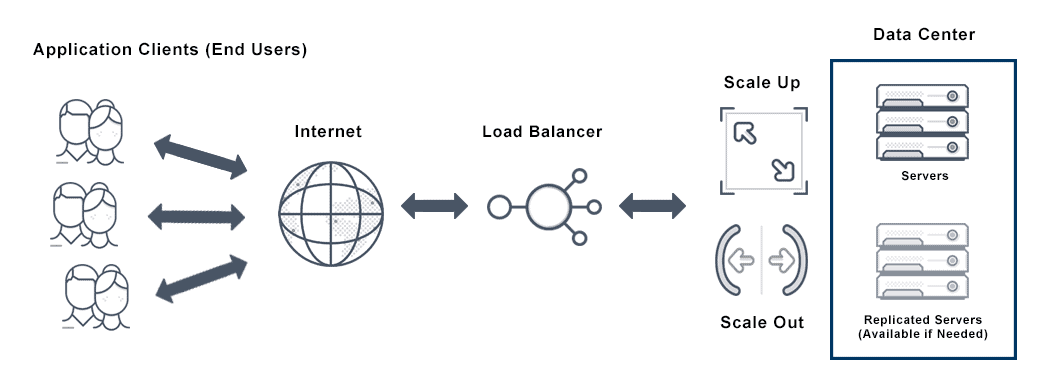

In a traditional static infrastructure setup, organizations provision a fixed amount of resources based on expected usage. However, this approach often leads to over-provisioning, where resources are underutilized during periods of low demand. On the cloud, a far more efficient way to manage resource allocation is by using autoscaling.

What Is Autoscaling?

Autoscaling refers to the automatic adjustment of resources in real time based on the demand or load of your application. For instance, with AWS Auto Scaling, Google Cloud Autoscaler, or Azure Virtual Machine Scale Sets, your compute instances can automatically increase or decrease in number to handle varying workloads.

Autoscaling ensures that you have enough resources to meet demand during traffic spikes, but it also helps you scale down during periods of low usage, reducing the number of idle resources that are still consuming your budget. Instead of manually resizing instances or leaving extra capacity running, autoscaling dynamically adjusts the infrastructure based on real-time needs.

Benefits of Autoscaling

The main benefit of autoscaling is its ability to optimize resource usage and costs. By scaling up only when needed, and scaling down when demand decreases, you avoid paying for unused capacity. This is especially important in environments where usage can fluctuate, such as e-commerce sites, media streaming services, or applications with unpredictable workloads.

Additionally, autoscaling improves performance by allocating resources based on actual demand. If traffic suddenly spikes, autoscaling adds more instances to handle the increased load. Conversely, when demand drops, autoscaling reduces resources, allowing you to pay for only what you need.

Cost Impact

The most immediate financial benefit of autoscaling is its ability to avoid over-provisioning. For example, if you have an application that typically experiences high traffic during the day but sees low traffic overnight, autoscaling can ensure that only the required number of instances are running during peak hours. During off-peak times, it will automatically reduce the number of active instances, lowering costs significantly.
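The day and night behavior described above can be illustrated with a simple proportional scaling rule. This is a sketch of one common approach, not the exact algorithm of any particular provider. The function name, the 60% CPU target, and the fleet limits are assumptions.

```python
import math

def desired_capacity(current: int, metric: float, target: float,
                     min_size: int, max_size: int) -> int:
    """Scale the fleet proportionally so the average metric approaches the target."""
    if target <= 0:
        raise ValueError("target must be positive")
    raw = math.ceil(current * metric / target)
    return max(min_size, min(max_size, raw))  # keep within fleet limits

# Daytime peak: 4 instances at 90% average CPU, target 60%.
peak = desired_capacity(current=4, metric=90.0, target=60.0, min_size=2, max_size=10)

# Overnight: 6 instances at 10% average CPU; the minimum size acts as a floor.
night = desired_capacity(current=6, metric=10.0, target=60.0, min_size=2, max_size=10)

print(peak, night)
```

Here the fleet grows to 6 instances at peak and shrinks to the minimum of 2 overnight. The `max_size` limit also caps spending if the metric misbehaves.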

Optimize Cloud Storage and Data Management

Data storage is one of the most significant contributors to cloud computing costs, especially as data grows rapidly in modern applications. Fortunately, cloud providers offer a range of storage options with different pricing tiers, and understanding how to optimize storage management can lead to substantial savings.

Storage Policies for Cost Reduction

One of the most effective strategies for saving money on cloud storage is by utilizing different storage classes or tiers based on how frequently data is accessed. For example, AWS offers multiple storage tiers for S3 buckets, including Standard, Intelligent-Tiering, and Glacier. The standard tier is optimized for frequently accessed data, while Glacier is designed for archival data that is infrequently accessed.

By moving older, infrequently accessed data to a cheaper tier, you can dramatically reduce your storage costs. The same applies to other cloud providers like Google Cloud Storage (Standard, Nearline, Coldline) or Azure Blob Storage (Hot, Cool, Archive).

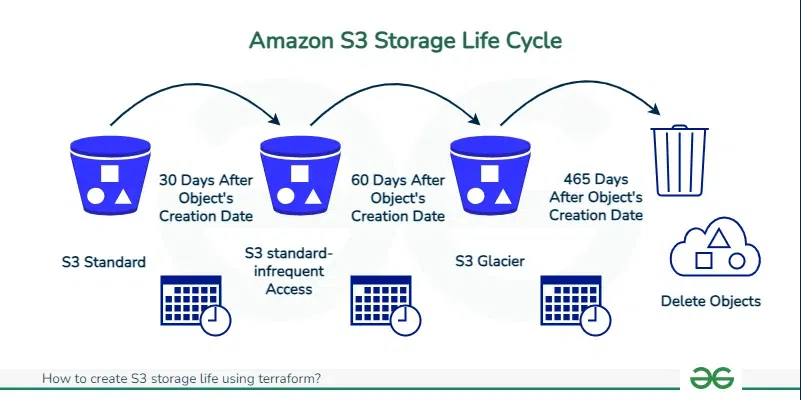

Lifecycle Policies for Data Management

Setting up lifecycle policies is another crucial aspect of storage optimization. Cloud providers allow you to define rules that automatically transition data between different storage classes based on its age, access frequency, or other criteria. For example, you can set a policy to automatically archive data that has not been accessed in the last 30 days or move it to a cheaper storage class after a set period.

Lifecycle management can be especially useful in scenarios where you need to store large amounts of data for compliance or regulatory reasons, but don’t need it to be immediately accessible. Automating this process ensures that you’re not paying for high-cost storage when a lower-cost alternative will suffice.
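The kind of rule a lifecycle policy encodes can be sketched as a mapping from days since last access to a storage tier. The tier names and thresholds below are hypothetical. Real policies are configured in the provider's storage service, and each provider has its own class names and minimum storage durations.

```python
from datetime import date

# Hypothetical thresholds (days since last access), checked from oldest to newest.
TIER_RULES = [(365, "archive"), (90, "cold"), (30, "infrequent")]

def storage_tier(last_accessed: date, today: date) -> str:
    """Pick the cheapest tier whose age threshold the object has passed."""
    age_days = (today - last_accessed).days
    for min_age, tier in TIER_RULES:
        if age_days >= min_age:
            return tier
    return "standard"

today = date(2024, 6, 30)
print(storage_tier(date(2024, 6, 20), today))  # accessed 10 days ago
print(storage_tier(date(2024, 3, 1), today))   # accessed 121 days ago
```

Archive tiers usually trade lower storage prices for retrieval fees and delays, so thresholds should reflect how quickly the data may be needed again.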

Cost Implications

The financial impact of optimizing storage is substantial. If you’re storing large volumes of data, moving it to cheaper tiers can result in savings that scale with your storage needs. Additionally, automating the movement of data with lifecycle policies ensures you are not overlooking data that can be archived or deleted, which could otherwise increase storage costs unnecessarily.

Right-Sizing Resources Continuously

Cloud providers give you the ability to choose from a wide variety of instance types and sizes, but not every instance is suited for every workload. Right-sizing refers to selecting the most appropriate instance type and size based on your application’s actual usage. Over time, you may discover that your initial resource allocation was too large or too small.

Automated Right-Sizing Tools

Fortunately, cloud providers offer automated tools to help you right-size your resources based on usage data. For instance, AWS Compute Optimizer, Google Cloud Recommender, and Azure Advisor provide insights into your instance utilization and recommend resizing opportunities. These tools analyze how your instances are performing and suggest smaller, more cost-effective instance types if you are consistently underutilizing resources.
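A heavily simplified version of the logic behind such recommendations might look like the sketch below. The size ladder, the CPU thresholds, and the `recommend_size` function are assumptions for illustration. The providers' tools consider many more signals, such as memory, network, and disk usage.

```python
from statistics import mean

SIZE_LADDER = ["small", "medium", "large", "xlarge"]  # hypothetical sizes

def recommend_size(current: str, cpu_samples: list[float],
                   low: float = 20.0, high: float = 80.0) -> str:
    """Suggest one step down if peak CPU stays low, one step up if average CPU is high."""
    idx = SIZE_LADDER.index(current)
    if max(cpu_samples) < low and idx > 0:
        return SIZE_LADDER[idx - 1]  # consistently underutilized
    if mean(cpu_samples) > high and idx < len(SIZE_LADDER) - 1:
        return SIZE_LADDER[idx + 1]  # consistently saturated
    return current

print(recommend_size("large", [5.0, 10.0, 12.0]))
print(recommend_size("medium", [85.0, 90.0, 95.0]))
```

Using the peak rather than the average for the downsizing check is a deliberately cautious choice: an instance is only shrunk if it never came close to its capacity during the sample window.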

Periodic Review and Adjustment

Right-sizing should be an ongoing process. As workloads change, so do resource requirements. A configuration that worked a few months ago may not be the best option now. To ensure optimal cost efficiency, it is important to periodically review your instances and storage configurations. By doing so, you can identify resources that have become oversized or that can be downscaled without sacrificing performance.

This strategy also applies to databases. Many organizations over-provision database storage and compute power during the initial setup, only to find that their database usage is much lower than expected. By right-sizing your database resources (whether it's adjusting the CPU, memory, or storage), you can optimize costs without compromising on functionality.

Consolidate Billing and Use Reserved Instances

Cloud providers offer discounts to customers who commit to using a certain amount of resources over an extended period, usually one or three years. These discounts are especially valuable for workloads that are predictable and long-term. Two strategies to consider are consolidating billing across multiple accounts and using reserved instances for consistent workloads.

Consolidating Accounts for Volume Discounts

If your organization has multiple cloud accounts, consider consolidating your billing. Cloud providers often offer volume discounts or better pricing for consolidated billing, as you aggregate your usage across accounts. This can be particularly useful for large enterprises with several departments or teams using cloud services.

For instance, AWS offers Consolidated Billing through its AWS Organizations feature, allowing you to aggregate usage across multiple AWS accounts and apply volume discounts. Azure and Google Cloud provide similar billing consolidation features, which can lead to significant savings, especially as your cloud usage scales.

Reserved Instances for Predictable Workloads

Reserved instances provide another opportunity to lower cloud costs. When you have workloads that are predictable and run continuously (e.g., production applications or databases), committing to reserved instances can save you up to roughly 70% compared to on-demand prices (AWS, for example, advertises up to 72%). Reserved instances work by offering a discount in exchange for a one- or three-year commitment to a specific instance type and region.

By calculating the total cost of ownership and the expected duration of your workloads, you can determine if reserved instances are a good option. They are typically most effective when your resource usage is stable and doesn’t fluctuate dramatically over time.
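The calculation above can be reduced to a break-even check: a reservation is billed for every hour of the term, so it only pays off if the instance runs for a large enough share of the year. The rates below are hypothetical, and the sketch ignores upfront payment options.

```python
HOURS_PER_YEAR = 8760

def break_even_utilization(on_demand_rate: float, reserved_rate: float) -> float:
    """Fraction of the year an instance must run for the reservation to pay off."""
    return reserved_rate / on_demand_rate

def cheaper_option(on_demand_rate: float, reserved_rate: float,
                   expected_hours: float) -> str:
    """Compare one year of on-demand usage with a one-year reservation."""
    on_demand_cost = on_demand_rate * expected_hours
    reserved_cost = reserved_rate * HOURS_PER_YEAR  # billed whether used or not
    return "reserved" if reserved_cost < on_demand_cost else "on-demand"

# Hypothetical rates in USD per hour.
print(f"{break_even_utilization(0.10, 0.06):.0%}")
print(cheaper_option(0.10, 0.06, expected_hours=8760))  # runs all year
print(cheaper_option(0.10, 0.06, expected_hours=3000))  # runs about a third of the year
```

With these example rates the reservation wins only if the instance runs for more than about 60% of the year, which is why reservations suit steady workloads rather than bursty ones.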

Use Cloud Cost Allocation Tags

Cloud cost allocation tags are metadata that you can attach to your cloud resources (such as virtual machines, storage, and databases). These tags allow you to track your cloud resources by department, project, environment, or any other dimension that is important to your organization.

Tagging Resources for Better Visibility

By implementing a robust tagging strategy, you can break down your cloud costs across various dimensions. For instance, you can tag resources based on the project name, application, team, or environment (e.g., dev, test, production). This visibility helps identify areas where costs are unnecessarily high or where resources are underutilized.

Optimize Based on Tagged Data

Once you have a clear view of how resources are being used, you can make more informed decisions. For example, if you notice that a particular project or environment is consistently over-provisioned, you can adjust the resources accordingly. Tags also help in cost forecasting, as you can track historical usage trends by resource or project.
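As a minimal sketch of this kind of analysis, the function below groups billing line items by a chosen tag. The record layout (`resource`, `cost`, `tags`) and the sample data are made up for the example. Real billing exports have provider-specific formats.

```python
from collections import defaultdict

def cost_by_tag(line_items: list[dict], tag_key: str) -> dict[str, float]:
    """Sum costs per value of `tag_key`; resources without the tag count as 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for item in line_items:
        tag_value = item["tags"].get(tag_key, "untagged")
        totals[tag_value] += item["cost"]
    return dict(totals)

# Made-up billing records for illustration.
line_items = [
    {"resource": "vm-1", "cost": 120.0, "tags": {"env": "prod", "team": "web"}},
    {"resource": "vm-2", "cost": 80.0, "tags": {"env": "dev", "team": "web"}},
    {"resource": "db-1", "cost": 200.0, "tags": {"env": "prod", "team": "data"}},
    {"resource": "bucket-1", "cost": 15.0, "tags": {}},
]

print(cost_by_tag(line_items, "env"))
print(cost_by_tag(line_items, "team"))
```

Tracking the `untagged` bucket is useful in its own right, because a growing untagged total signals that the tagging policy is not being followed.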

Networking and Data Transfer Optimization

Data transfer is often one of the hidden costs in cloud computing. Whether it’s inter-region traffic or outbound data sent to the internet, the cost of data transfer can add up quickly if not managed correctly.

Minimize Data Transfer Costs

Data transfer costs are often tied to geographic regions. For example, transferring data between AWS regions can incur significant charges. To minimize these costs, it’s essential to optimize how you distribute your resources. For instance, try to keep all your resources within the same region when possible, especially for high-traffic applications. Also, be mindful of the data transfer costs between your on-premise infrastructure and the cloud, as this can become costly as well.

Edge Computing for Latency-Sensitive Applications

For applications that require low latency, such as real-time analytics or content delivery, edge computing can be a valuable approach. By placing resources closer to end-users or devices, you can reduce the load on central cloud data centers and minimize the associated transfer costs. Many cloud providers offer edge services that allow you to deploy applications or services in geographically distributed locations to reduce latency and costs.

Monitor and Track Cloud Spending Regularly

The most important factor in effectively managing cloud costs is constant monitoring. Cloud environments are dynamic, and without regular oversight, it's easy to overlook inefficiencies and cost overruns. Luckily, cloud providers offer powerful tools to help you keep track of your spending, forecast costs, and identify areas for optimization.

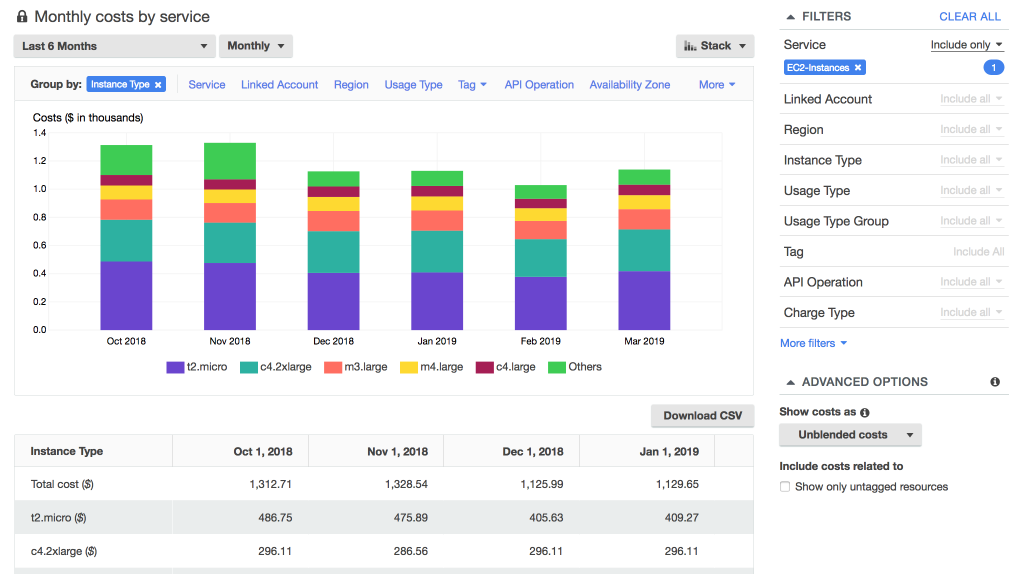

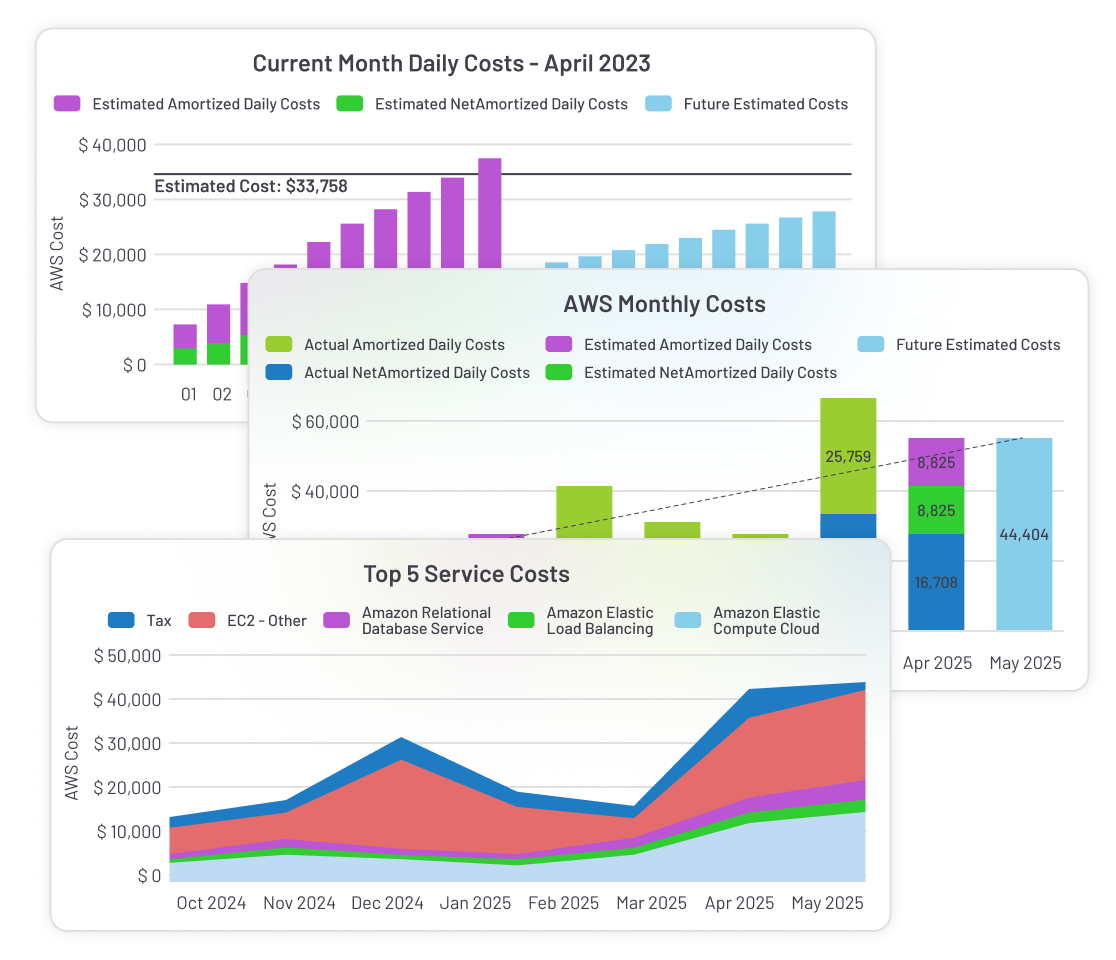

Set Up Cloud Cost Management Tools

Every major cloud provider has built-in tools to help you monitor and manage costs. For example, AWS offers AWS Cost Explorer and AWS Budgets, Azure provides Azure Cost Management and Billing, and Google Cloud has Cloud Billing reports and cost management tools. These tools allow you to view your cloud usage and spending in near real time, track usage patterns, and generate cost forecasts.

These tools also provide detailed reports, breaking down costs by project, department, resource type, or tag. This granularity enables you to easily spot areas where expenses are higher than expected and where optimization may be needed. For example, if you notice an unexpected spike in costs for a particular service, you can quickly investigate and take corrective action.

Budgeting and Alerts

A key feature of cloud cost management tools is the ability to set budgets and alerts. With these features, you can define spending limits for different projects or teams and receive notifications when your spending approaches or exceeds those limits. Setting up alerts ensures that you are not caught off guard by unexpected charges and gives you a chance to take action before costs spiral out of control.

By regularly reviewing spending reports and setting proactive budget alerts, you can better control costs and ensure that you're staying within your financial goals.
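The threshold-based alerts described above can be sketched in a few lines. The `budget_alerts` function and the 50%, 80%, and 100% thresholds are assumptions. The providers' budgeting tools let you configure equivalent rules without writing code.

```python
def budget_alerts(spend: float, budget: float,
                  thresholds: tuple[float, ...] = (0.5, 0.8, 1.0)) -> list[str]:
    """Return one message for each threshold the current spend has reached."""
    used = spend / budget
    return [f"Spend has reached {t:.0%} of budget" for t in thresholds if used >= t]

alerts = budget_alerts(spend=850.0, budget=1000.0)
for message in alerts:
    print(message)
```

Alerting below 100% is the important design choice here: a warning at 80% leaves time to act before the budget is actually exceeded.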

Optimize Continuously

Cloud optimization is not a one-time task but an ongoing process. As your workloads evolve, your resource needs and usage patterns will change. By regularly reviewing your cloud costs, revisiting your architecture, and adjusting resource configurations, you can ensure that your cloud infrastructure remains cost-effective as your business scales.

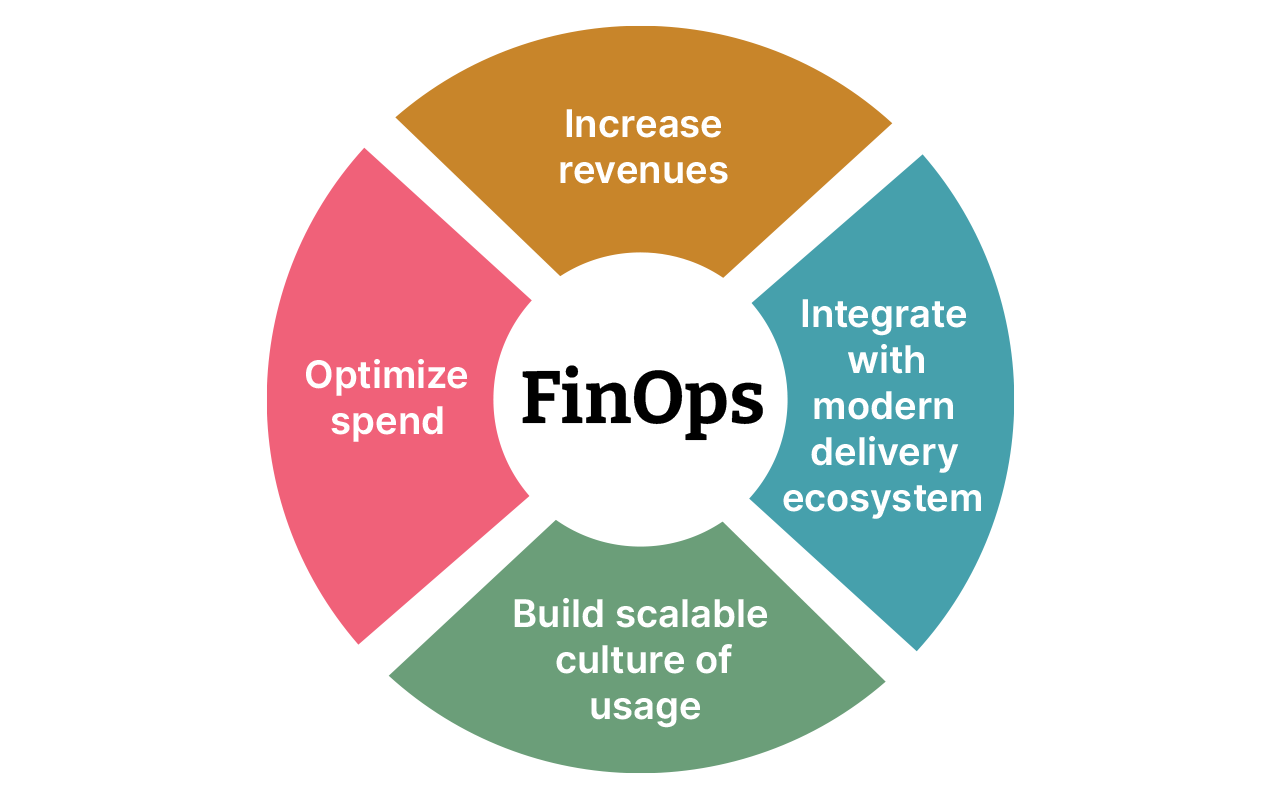

In addition to monitoring tools, many organizations adopt FinOps (Cloud Financial Operations) practices. FinOps is a collaborative approach to managing cloud financials that involves continuous communication between finance, operations, and development teams. This ensures alignment between cost goals and the implementation of cost-saving practices at every level of the organization.